Winter Semester 2010

Neurocognition

Contents

This course introduces the modeling of neurocognitive processes in the brain. Neurocognition is a research field at the intersection of psychology, neuroscience, computer science, and physics. It serves, on the one hand, to understand the brain and, on the other, to develop intelligent adaptive systems. The lectures first present realistic neuron models and network properties. Building on these basic mechanisms, comprehensive models of various cognitive functions are then explained. In the exercises, the algorithms from the lectures are explored in depth by implementing them in MATLAB. Knowledge of MATLAB is not a prerequisite for participation; if needed, it can be acquired beforehand. For this purpose, lecture notes for self-study and two practical exercises are offered in the first weeks of the lecture period.

Goals: This module provides theoretical concepts and practical experience for developing neurocognitive models.

Formalities

Recommended prerequisites: basic knowledge from Mathematics I to IV

Examination: oral exam, 5 credit points

New: Due to the high number of participants, a second exercise session is offered starting 28 October 2010.

Literature

Dayan, P. & Abbott, L., Theoretical Neuroscience, MIT Press, 2001.

Syllabus

Introduction

The introduction motivates the goals of the course and introduces basic modeling concepts. It further explains why computational cognitive models are useful for understanding the brain and why they can lead to a new approach to modeling truly intelligent agents.

Part I Model neurons

Part I describes the basic computational elements of the brain, the neurons. Even a single neuron is an extremely complicated device, so models at different levels of abstraction are introduced.

1.1 The neuron as an electrical circuit

Biophysical models of single cells are constructed using electrical circuits composed of resistors, capacitors, and voltage and current sources. This lecture introduces these basic elements.
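A minimal sketch of the passive membrane as an RC circuit, assuming illustrative values (R = 10 MΩ, C = 1 nF, E_L = -70 mV) that are not taken from the course material: a capacitor C in parallel with a leak resistor R and a battery E_L, driven by an injected current I, obeys C dV/dt = -(V - E_L)/R + I. The hypothetical functions `simulate_rc` and `analytic_rc` integrate this equation with the forward Euler method and compare it with the exact solution V(t) = E_L + R I (1 - exp(-t/τ)), where τ = RC.

```python
import math


def simulate_rc(i_inj, r_m=10.0, c_m=1.0, e_leak=-70.0, t_max=100.0, dt=0.01):
    """Forward-Euler integration of C dV/dt = -(V - E_L)/R + I.

    Assumed units: R in MOhm, C in nF, I in nA, V in mV, t in ms, so that
    tau = R*C is in ms and R*I is in mV. Returns V at every time step.
    """
    v = e_leak
    trace = [v]
    for _ in range(int(round(t_max / dt))):
        dv_dt = (-(v - e_leak) / r_m + i_inj) / c_m  # leak current plus injected current
        v += dt * dv_dt
        trace.append(v)
    return trace


def analytic_rc(t, i_inj, r_m=10.0, c_m=1.0, e_leak=-70.0):
    """Exact solution for a current step switched on at t = 0."""
    tau = r_m * c_m
    return e_leak + r_m * i_inj * (1.0 - math.exp(-t / tau))


trace = simulate_rc(1.5)  # 1.5 nA step; trace[k] is V at t = k * dt
```

With these units τ = RC = 10 ms, and the membrane settles at E_L + R I, i.e. -55 mV for I = 1.5 nA.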

1.2 Integrate-and-fire models

Integrate-and-fire models describe the membrane potential with an ordinary differential equation (ODE) but do not explicitly model the generation of an action potential. Instead, they generate an action potential whenever the membrane potential reaches a particular threshold, after which the potential is reset.
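A minimal leaky integrate-and-fire sketch, assuming typical illustrative values (E_L = V_reset = -65 mV, V_th = -50 mV, τ_m = 10 ms, R_m = 10 MΩ) and hypothetical function names. Between spikes the model obeys τ_m dV/dt = E_L - V + R_m I. For a constant current the interspike interval has the closed form t_isi = τ_m ln((R_m I + E_L - V_reset) / (R_m I + E_L - V_th)), which serves as a check on the simulation.

```python
import math


def simulate_lif(i_inj, tau_m=10.0, r_m=10.0, e_leak=-65.0, v_th=-50.0,
                 v_reset=-65.0, t_max=1000.0, dt=0.01):
    """Leaky integrate-and-fire neuron driven by a constant current.

    tau_m dV/dt = E_L - V + R_m I; when V reaches v_th a spike time is
    recorded and V is reset to v_reset. Units: mV, ms, MOhm, nA.
    Returns the list of spike times in ms.
    """
    v = e_leak
    spikes = []
    for step in range(int(round(t_max / dt))):
        v += dt * (e_leak - v + r_m * i_inj) / tau_m
        if v >= v_th:                      # threshold crossing: emit spike, reset
            spikes.append((step + 1) * dt)
            v = v_reset
    return spikes


def isi_theory(i_inj, tau_m=10.0, r_m=10.0, e_leak=-65.0, v_th=-50.0, v_reset=-65.0):
    """Analytic interspike interval; infinite if the drive never reaches threshold."""
    v_inf = e_leak + r_m * i_inj
    if v_inf <= v_th:
        return math.inf
    return tau_m * math.log((v_inf - v_reset) / (v_inf - v_th))


spikes = simulate_lif(2.0)  # R_m I = 20 mV, above the 15 mV needed to reach threshold
```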

Exercise I.1: Integrate-and-fire model (2 units), Files: iandf.m, makeie.m, normal.m

Solution I.1, Files: lsg1a.m, lsg1b.m

The membrane potential controls a vast number of nonlinear gates and can vary rapidly over large distances. The equilibrium potential results from the balance between electrostatic forces and diffusion driven by thermal energy; it can be described by the Nernst and Goldman-Hodgkin-Katz equations.
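A sketch of both equations: the Nernst potential E = (RT/zF) ln([X]_out/[X]_in) for a single ion species, and the Goldman-Hodgkin-Katz (GHK) voltage equation for K+, Na+ and Cl-, in which Cl- (valence -1) enters with its inside and outside concentrations swapped. The concentrations and relative permeabilities in the usage lines are illustrative assumptions, not course data, and body temperature (310.15 K) is assumed by default.

```python
import math

R = 8.314462618      # gas constant, J / (mol K)
F = 96485.33212      # Faraday constant, C / mol


def nernst(c_out, c_in, z=1, temp_k=310.15):
    """Nernst equilibrium potential in volts for an ion of valence z."""
    return (R * temp_k) / (z * F) * math.log(c_out / c_in)


def ghk_voltage(p, c_out, c_in, temp_k=310.15):
    """GHK resting potential in volts for K+, Na+ and Cl-.

    p, c_out, c_in are dicts keyed by 'K', 'Na', 'Cl' holding relative
    permeabilities and concentrations (any consistent unit, e.g. mM).
    Cl- is negative, so its inside/outside concentrations are swapped.
    """
    num = p['K'] * c_out['K'] + p['Na'] * c_out['Na'] + p['Cl'] * c_in['Cl']
    den = p['K'] * c_in['K'] + p['Na'] * c_in['Na'] + p['Cl'] * c_out['Cl']
    return (R * temp_k) / F * math.log(num / den)


# Illustrative (assumed) concentrations in mM and relative permeabilities.
out_mM = {'K': 5.0, 'Na': 145.0, 'Cl': 110.0}
in_mM = {'K': 140.0, 'Na': 15.0, 'Cl': 10.0}
p_rel = {'K': 1.0, 'Na': 0.05, 'Cl': 0.45}
e_k = nernst(out_mM['K'], in_mM['K'])
v_rest = ghk_voltage(p_rel, out_mM, in_mM)
```

With these values the K+ Nernst potential is near -89 mV, and the GHK potential lies near -65 mV because the small Na+ and Cl- permeabilities pull it away from E_K. If only K+ is permeable, the GHK equation reduces to the Nernst equation for K+.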

1.4 Hodgkin and Huxley model

The Hodgkin-Huxley (H-H) theory of the action potential, formulated about 60 years ago, ranks among the most significant conceptual breakthroughs in neuroscience (see Häusser, 2000). This lecture provides a full explanation of this model and its properties.
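A compact sketch of the model, assuming the standard squid giant axon parameters in the modern convention with rest near -65 mV (units: mV, ms, mS/cm², µA/cm², µF/cm²). The ionic current is I_ion = g_Na m³h (V - E_Na) + g_K n⁴ (V - E_K) + g_L (V - E_L), and each gating variable x ∈ {n, m, h} follows dx/dt = α_x(V)(1 - x) - β_x(V) x. The forward Euler scheme, dt = 0.01 ms, and all function names are our own choices.

```python
import math

C_M = 1.0                                   # membrane capacitance, uF/cm^2
G_NA, G_K, G_L = 120.0, 36.0, 0.3           # maximal conductances, mS/cm^2
E_NA, E_K, E_L = 50.0, -77.0, -54.387       # reversal potentials, mV


def _ratio(u, k):
    """u / (1 - exp(-u/k)), using its limit k at u == 0 to avoid 0/0."""
    if abs(u) < 1e-9:
        return k
    return u / (1.0 - math.exp(-u / k))


def rates(v):
    """Opening (alpha) and closing (beta) rates in 1/ms for the gates n, m, h."""
    a_n = 0.01 * _ratio(v + 55.0, 10.0)
    b_n = 0.125 * math.exp(-(v + 65.0) / 80.0)
    a_m = 0.1 * _ratio(v + 40.0, 10.0)
    b_m = 4.0 * math.exp(-(v + 65.0) / 18.0)
    a_h = 0.07 * math.exp(-(v + 65.0) / 20.0)
    b_h = 1.0 / (1.0 + math.exp(-(v + 35.0) / 10.0))
    return (a_n, b_n), (a_m, b_m), (a_h, b_h)


def simulate_hh(i_ext, t_max=100.0, dt=0.01):
    """Forward-Euler integration; returns the voltage trace in mV."""
    v = -65.0
    # Start every gate at its steady-state value x_inf = alpha / (alpha + beta).
    (a_n, b_n), (a_m, b_m), (a_h, b_h) = rates(v)
    n, m, h = a_n / (a_n + b_n), a_m / (a_m + b_m), a_h / (a_h + b_h)
    trace = []
    for _ in range(int(round(t_max / dt))):
        (a_n, b_n), (a_m, b_m), (a_h, b_h) = rates(v)
        i_na = G_NA * m ** 3 * h * (v - E_NA)
        i_k = G_K * n ** 4 * (v - E_K)
        i_l = G_L * (v - E_L)
        v += dt * (i_ext - i_na - i_k - i_l) / C_M
        n += dt * (a_n * (1.0 - n) - b_n * n)
        m += dt * (a_m * (1.0 - m) - b_m * m)
        h += dt * (a_h * (1.0 - h) - b_h * h)
        trace.append(v)
    return trace


def count_spikes(trace, threshold=0.0):
    """Count upward crossings of the threshold (mV)."""
    return sum(1 for a, b in zip(trace, trace[1:]) if a < threshold <= b)
```

Without input the model stays at rest, while a sustained current of 10 µA/cm² produces repetitive firing.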

Exercise I.2: Hodgkin and Huxley model (3 units), Files: hhsim_v3.1.zip

Solution I.2

The Hodgkin-Huxley model captures important principles of neural action potentials. However, several important discoveries have been made since it was formulated. This lecture presents a few important ones, including more detailed models.

1.6 Synapses

The synapse is remarkably complex and involves many simultaneous processes. This lecture explains how to model different types of synapses (AMPA, NMDA, GABA) and how to include them in the integrate-and-fire model.
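A minimal sketch of conductance-based synapses, assuming single-exponential conductances: each presynaptic spike increments g_s by g_max, which then decays with time constant τ_s, and the synaptic current is I_s = -g_s (V - E_s), positive meaning depolarizing. AMPA, NMDA and GABA_A synapses differ mainly in E_s and τ_s; the values used here (E_s = 0 mV for AMPA, E_s = -80 mV for GABA_A, τ_s = 5 ms) are illustrative assumptions. The NMDA conductance is additionally scaled by a voltage-dependent Mg2+ unblock factor, here in the commonly used Jahr & Stevens form 1 / (1 + [Mg2+]/3.57 mM · exp(-0.062 V/mV)). Function names are hypothetical.

```python
import math
from collections import Counter


def conductance_trace(spike_times, tau_s, g_max, t_max, dt=0.1):
    """Single-exponential synaptic conductance (times in ms).

    Each presynaptic spike adds g_max; between spikes g decays with the
    exact per-step factor exp(-dt / tau_s).
    """
    decay = math.exp(-dt / tau_s)
    counts = Counter(int(round(t / dt)) for t in spike_times)  # spikes per time step
    g, trace = 0.0, []
    for k in range(int(round(t_max / dt))):
        g = g * decay + g_max * counts[k]
        trace.append(g)
    return trace


def synaptic_current(g, v, e_syn):
    """Current entering the cell (positive = depolarizing): -g (V - E_syn)."""
    return -g * (v - e_syn)


def nmda_mg_block(v, mg_mm=1.0):
    """Fraction of NMDA channels not blocked by Mg2+ at membrane potential v (mV)."""
    return 1.0 / (1.0 + (mg_mm / 3.57) * math.exp(-0.062 * v))


g_ampa = conductance_trace([0.0, 20.0], tau_s=5.0, g_max=1.0, t_max=50.0)
```

In an integrate-and-fire neuron, such a current is simply added to the injected current term, R_m (I + I_s).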

Exercise I.3: Synapses (1 unit), Files: Ansatz3_a

Although it is generally agreed that neurons signal information through sequences of action potentials, the neural code by which information is transferred through the cortex remains elusive. In the cortex, the timing of successive action potentials is highly irregular. This lecture explains under which conditions such firing patterns can be modeled by a Poisson process.
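A sketch of a homogeneous Poisson spike generator, assuming a constant firing rate: interspike intervals are drawn from an exponential distribution, which makes their coefficient of variation (CV = standard deviation / mean) equal to 1, the hallmark of Poisson firing. Cortical spike trains often show CVs close to 1, although refractoriness makes real neurons deviate at very short intervals. The function names are hypothetical.

```python
import math
import random


def poisson_spike_train(rate_hz, duration_s, rng):
    """Homogeneous Poisson process: interspike intervals are exponential."""
    spikes, t = [], 0.0
    while True:
        t += rng.expovariate(rate_hz)   # mean interval 1 / rate
        if t >= duration_s:
            return spikes
        spikes.append(t)


def coefficient_of_variation(spikes):
    """CV of the interspike intervals; about 1 for a Poisson process."""
    isis = [b - a for a, b in zip(spikes, spikes[1:])]
    mean = sum(isis) / len(isis)
    var = sum((x - mean) ** 2 for x in isis) / (len(isis) - 1)
    return math.sqrt(var) / mean


rng = random.Random(1)
train = poisson_spike_train(20.0, 100.0, rng)   # 20 Hz for 100 s
```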

As explained in previous lectures, information is transferred from one neuron to other neurons by action potentials (spikes). However, for several phenomena it is sufficient to model just the firing rate of neurons rather than the individual spikes. This lecture introduces basic rate-coded models.
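A minimal firing-rate sketch, assuming a threshold-linear activation function F (a sigmoid is an equally common choice): the rate r of a postsynaptic neuron relaxes toward F(Σ_j w_j u_j) according to τ dr/dt = -r + F(Σ_j w_j u_j), where u_j are presynaptic rates and w_j synaptic weights. τ = 10 ms and the function names are hypothetical.

```python
def relu_rate(x, threshold=0.0, gain=1.0):
    """Threshold-linear activation function F (rates in Hz)."""
    return gain * max(0.0, x - threshold)


def simulate_rate_neuron(inputs, weights, tau=10.0, t_max=200.0, dt=0.1):
    """Euler integration of tau dr/dt = -r + F(sum_j w_j u_j) for constant inputs u_j."""
    drive = sum(w * u for w, u in zip(weights, inputs))
    r = 0.0
    for _ in range(int(round(t_max / dt))):
        r += dt / tau * (-r + relu_rate(drive))
    return r


r_exc = simulate_rate_neuron([10.0, 20.0], [0.5, 1.0])   # net excitatory drive of 25
r_inh = simulate_rate_neuron([10.0], [-1.0])             # net inhibitory drive
```

After 20 time constants the rate has converged to its steady state F(drive); purely inhibitory drive leaves the rate at zero because F is rectified.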

Additional Reading: Shadlen MN, Newsome WT. (1994) Noise, neural codes and cortical organization. Curr Opin Neurobiol. 4:569-79.

Part II Learning

2.1 Learning - Neural principles

Learning is a fundamental property of the brain. This lecture introduces the most important concepts of synaptic plasticity and learning rules.
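Plain Hebbian learning, Δw ∝ y x, lets weights grow without bound. As one illustrative example of a stabilized Hebbian rule (our choice, not necessarily the rule used in Exercise II.1), Oja's rule Δw = η y (x - y w) keeps the weight vector near unit length and makes a linear neuron y = w · x converge to the principal eigenvector of the input correlation matrix. The input statistics, η = 0.005, and the function names are assumptions.

```python
import math
import random


def oja_learning(samples, eta=0.005, seed=0):
    """Hebbian learning stabilized by Oja's rule: dw = eta * y * (x - y * w)."""
    rng = random.Random(seed)
    w = [rng.uniform(-0.1, 0.1) for _ in samples[0]]   # small random initial weights
    for x in samples:
        y = sum(wi * xi for wi, xi in zip(w, x))         # linear neuron output
        w = [wi + eta * y * (xi - y * wi) for wi, xi in zip(w, x)]
    return w


def correlated_inputs(n, seed=1):
    """Zero-mean 2-D inputs whose main variance lies along (1, 1) / sqrt(2)."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        s = rng.gauss(0.0, 2.0)                          # shared (correlated) component
        data.append([s / math.sqrt(2) + rng.gauss(0.0, 0.3),
                     s / math.sqrt(2) + rng.gauss(0.0, 0.3)])
    return data


w = oja_learning(correlated_inputs(10000))
```

The learned weight vector ends up with norm close to 1 and points along the diagonal, the direction of largest input variance (its sign is arbitrary).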

Exercise II.1: Learning (2 units), Files: Exercise4.zip

Solution II.1

Part III Network Mechanisms

3.1 Lateral and feedback circuits

Important properties of the brain emerge from the interactions between neurons. This lecture provides an overview of properties that appear in local, lateral, and feedback circuits.
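One classic example of a lateral circuit, sketched here with our own parameter choices (A = B = 1) and hypothetical names, is a feedforward shunting on-center off-surround network of the kind studied by Grossberg: dx_i/dt = -A x_i + (B - x_i) I_i - x_i Σ_{k≠i} I_k. Setting the derivative to zero gives x_i = B I_i / (A + Σ_k I_k), so activities stay below B however strong the input, while their ratios preserve the input pattern (normalization).

```python
def shunting_network(inputs, a=1.0, b=1.0, t_max=20.0, dt=0.01):
    """Feedforward shunting on-center off-surround network (Euler integration).

    dx_i/dt = -A x_i + (B - x_i) I_i - x_i * sum_{k != i} I_k
    Each cell is excited by its own input and shunted by all other inputs.
    """
    total = sum(inputs)
    x = [0.0] * len(inputs)
    for _ in range(int(round(t_max / dt))):
        x = [xi + dt * (-a * xi + (b - xi) * ii - xi * (total - ii))
             for xi, ii in zip(x, inputs)]
    return x


x_weak = shunting_network([1.0, 2.0, 3.0])
x_strong = shunting_network([10.0, 20.0, 30.0])   # ten times stronger, same pattern
```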

Exercise III.1: Rate coded neurons (1 unit)

Solution III.1

Additional Reading:

Grossberg, S (1988) Nonlinear Neural Networks: Principles, Mechanisms, and Architectures. Neural Networks, 1:17-61. (only parts 1-15)

Salinas E, Abbott LF (1996) A model of multiplicative neural responses in parietal cortex. Proc Natl Acad Sci U S A, 93:11956-61.

3.2 Coordinate Transformations

Space can be represented in different coordinate frames, such as eye-centered for early visual processing and head-centered for acoustic stimuli. The brain appears to rely on several such coordinate systems to represent the space of our external environment. This lecture therefore explains how stimuli can be transformed from one coordinate system to another.
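Exercise III.2 uses radial basis function networks. As a minimal sketch under our own assumptions (1-D positions in [-1, 1], a 7 × 7 grid of Gaussian basis functions of width 0.35, learning rate 0.1), units tuned jointly to retinal position r and eye position e can feed a linear readout that is trained with the delta rule to output the head-centered position h = r + e. All names are hypothetical.

```python
import math
import random

CENTERS = [-1.0 + i / 3.0 for i in range(7)]   # basis centers along each input axis
SIGMA = 0.35                                    # width of each Gaussian basis function


def basis(r, e):
    """Gaussian basis functions over (retinal position r, eye position e)."""
    return [math.exp(-((r - cr) ** 2 + (e - ce) ** 2) / (2 * SIGMA ** 2))
            for cr in CENTERS for ce in CENTERS]


def train_readout(n_samples=10000, eta=0.1, seed=0):
    """Learn linear weights with the delta (LMS) rule so that w . phi(r, e) ~= r + e."""
    rng = random.Random(seed)
    w = [0.0] * (len(CENTERS) ** 2)
    for _ in range(n_samples):
        r, e = rng.uniform(-1, 1), rng.uniform(-1, 1)
        phi = basis(r, e)
        err = (r + e) - sum(wi * pi for wi, pi in zip(w, phi))   # target minus output
        w = [wi + eta * err * pi for wi, pi in zip(w, phi)]
    return w


def head_centered(w, r, e):
    """Readout of the trained network for a retinal and an eye position."""
    return sum(wi * pi for wi, pi in zip(w, basis(r, e)))


w = train_readout()
```

The basis layer itself is independent of the target; a different linear readout of the same units could compute another transformation, which is one motivation for basis-function representations.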

Exercise III.2: Radial Basis Function Networks (1 unit), Files: Exercise6.zip

Solution III.2

Suggested Reading:

Cohen & Andersen (2002). Nature Rev Neurosci 3:553-562.

Pouget, A., and Snyder, L. (2000) Computational approaches to sensorimotor transformations. Nature Neuroscience. 3:1192-1198.

Deneve S, Latham PE, Pouget A. (2001) Efficient computation and cue integration with noisy population codes. Nat Neurosci., 4:826-31.

Pouget A, Deneve S, Duhamel JR. (2002) A computational perspective on the neural basis of multisensory spatial representations. Nat Rev Neurosci., 3:741-7.

Part IV Systems

Vision is perhaps our most important source of sensory information about the environment. This lecture explains the first, precortical processing steps of visual perception.
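Retinal ganglion cells and LGN relay cells have center-surround receptive fields, which are commonly modeled as a difference of Gaussians (DoG). A 1-D sketch, assuming center and surround widths of 1 and 3 samples and hypothetical function names: each Gaussian is normalized to unit sum so that the cell ignores uniform illumination, a spot on the center excites the cell, and the same spot in the surround inhibits it.

```python
import math


def dog_kernel(radius=10, sigma_c=1.0, sigma_s=3.0):
    """1-D difference-of-Gaussians receptive field (ON center, OFF surround).

    Center and surround are each normalized to sum to 1, so the kernel
    sums to zero and gives no response to uniform illumination.
    """
    xs = range(-radius, radius + 1)
    center = [math.exp(-x * x / (2 * sigma_c ** 2)) for x in xs]
    surround = [math.exp(-x * x / (2 * sigma_s ** 2)) for x in xs]
    sc, ss = sum(center), sum(surround)
    return [c / sc - s / ss for c, s in zip(center, surround)]


def response(kernel, stimulus):
    """Linear response of a cell whose receptive field is aligned with the stimulus."""
    return sum(k * s for k, s in zip(kernel, stimulus))


kernel = dog_kernel()   # 21 samples; index 10 is the receptive-field center
```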

The first cortical processing of the visual modality takes place in area V1, also called striate cortex. This lecture introduces the receptive fields of neurons in V1 and explains what kind of information V1 encodes.
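V1 simple-cell receptive fields are commonly described by Gabor functions, a Gaussian envelope multiplied by a sinusoidal carrier, which is the topic of Exercise IV.1. A sketch, assuming a 21 × 21 pixel filter with σ = 3, wavelength 8 and aspect ratio 0.5 (illustrative values) and hypothetical function names, shows orientation selectivity: the response to a grating at the preferred orientation is far larger than the response to the orthogonal grating.

```python
import math


def gabor(size=21, sigma=3.0, wavelength=8.0, theta=0.0, phase=0.0, gamma=0.5):
    """2-D Gabor filter: Gaussian envelope times a cosine carrier.

    theta is the preferred orientation (radians), gamma the aspect ratio.
    Returns a size x size list of lists centered on the middle pixel.
    """
    half = size // 2
    kernel = []
    for y in range(-half, half + 1):
        row = []
        for x in range(-half, half + 1):
            xr = x * math.cos(theta) + y * math.sin(theta)    # rotated coordinates
            yr = -x * math.sin(theta) + y * math.cos(theta)
            envelope = math.exp(-(xr ** 2 + (gamma * yr) ** 2) / (2 * sigma ** 2))
            row.append(envelope * math.cos(2 * math.pi * xr / wavelength + phase))
        kernel.append(row)
    return kernel


def grating(size=21, wavelength=8.0, theta=0.0):
    """Sinusoidal grating whose luminance varies along direction theta."""
    half = size // 2
    return [[math.cos(2 * math.pi * (x * math.cos(theta) + y * math.sin(theta)) / wavelength)
             for x in range(-half, half + 1)] for y in range(-half, half + 1)]


def filter_response(kernel, image):
    """Linear filter response: pixel-wise product summed over the image."""
    return sum(k * p for krow, prow in zip(kernel, image) for k, p in zip(krow, prow))


g = gabor()
pref = filter_response(g, grating(theta=0.0))
orth = filter_response(g, grating(theta=math.pi / 2))
```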

Exercise IV.1: Gabor Filter (1 unit), Files: Exercise7.zip

Additional Reading:

Adelson, E. H., Bergen, J. R. (1985): Spatiotemporal energy models for the perception of motion. J. Opt. Soc. Am. A/Vol. 2, No. 2 February 1985, 284-299

Beyond area V1, one pathway in the brain (the ventral pathway) is particularly devoted to visual object recognition. This lecture explains the architecture of this pathway and how it is thought to implement object recognition.
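Exercise IV.2 uses HMAX, whose core idea is to alternate template-matching (S) layers with MAX-pooling (C) layers. A 1-D sketch of a single S/C stage, assuming Gaussian tuning with σ = 0.5, pooling over all positions, and hypothetical function names: an S unit responds only when its template appears at its own position, while the C unit, taking the maximum over positions, responds identically wherever the feature appears.

```python
import math


def s_layer(signal, template, sigma=0.5):
    """S units: Gaussian tuning to the template at every position."""
    n = len(template)
    out = []
    for i in range(len(signal) - n + 1):
        dist2 = sum((signal[i + j] - template[j]) ** 2 for j in range(n))
        out.append(math.exp(-dist2 / (2 * sigma ** 2)))
    return out


def c_layer(s_responses):
    """C unit: MAX over positions, giving tolerance to where the feature appears."""
    return max(s_responses)


template = [0.0, 1.0, 0.0]
left = [1.0 if i == 3 else 0.0 for i in range(12)]    # feature near the left
right = [1.0 if i == 8 else 0.0 for i in range(12)]   # same feature, shifted right
```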

Exercise IV.2: Object Recognition and HMAX (1 unit), Files: Exercise8.zip

4.4 Attention

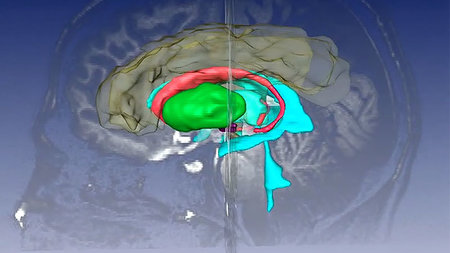

Attention refers to mechanisms in the brain that shift processing resources, e.g., in visual attention, toward particular locations, features, or objects. This lecture introduces the principles, the neural mechanisms, and the brain areas involved in visual attention.
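A greatly simplified sketch in the spirit of the saliency-based model of Itti, Koch & Niebur (1998) listed in the reading below, assuming a single 1-D feature map, one center-surround scale, no normalization operator, and hypothetical function names: center-surround contrast yields a saliency map, a winner-take-all step selects the most salient location, and inhibition of return suppresses that location so that attention shifts to the next most salient one.

```python
def center_surround(features, surround_radius=5):
    """Saliency as |center - surround|: each value minus the mean of its
    neighbourhood (the window is clipped at the borders)."""
    n = len(features)
    out = []
    for i, f in enumerate(features):
        lo, hi = max(0, i - surround_radius), min(n, i + surround_radius + 1)
        window = features[lo:hi]
        out.append(abs(f - sum(window) / len(window)))
    return out


def scan_path(features, n_shifts=2, ior_radius=3):
    """Winner-take-all selection on the saliency map with inhibition of return."""
    saliency = center_surround(features)
    path = []
    for _ in range(n_shifts):
        winner = max(range(len(saliency)), key=saliency.__getitem__)
        path.append(winner)
        for j in range(max(0, winner - ior_radius), min(len(saliency), winner + ior_radius + 1)):
            saliency[j] = 0.0   # inhibition of return around the attended location
    return path


# Uniform background with a strong pop-out at 12 and a weaker one at 25.
scene = [1.0 if i == 12 else 0.6 if i == 25 else 0.2 for i in range(30)]
```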

Additional Reading:

Itti, L., Koch, C., Niebur, E. (1998): A Model of Saliency-Based Visual Attention for Rapid Scene Analysis. IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 20, no. 11, November 1998, 1254-1259

Itti, L., Koch, C. (2000): A saliency-based search mechanism for overt and covert shifts of visual attention. Vision Research 40 (2000) 1489-1506

4.4.4 The systems level of attention

Additional Reading:

Hamker, F. H. (2005) The Reentry Hypothesis: The Putative Interaction of the Frontal Eye Field, Ventrolateral Prefrontal Cortex, and Areas V4, IT for Attention and Eye Movement. Cerebral Cortex, 15:431-447.

Hamker, F. H. (2005) The emergence of attention by population-based inference and its role in distributed processing and cognitive control of vision. Computer Vision and Image Understanding, 100:64-106.

Hamker, F. H., Zirnsak, M. (2006) V4 receptive field dynamics as predicted by a systems-level model of visual attention using feedback from the frontal eye field. Neural Networks, 19:1371-1382.