Research Seminar

The research seminar is aimed at interested students in the Master's or Bachelor's programs. Other interested parties are nevertheless welcome at any time! The presenting students and staff of the Professorship of Artificial Intelligence (Professur KI) present current research-oriented topics. Talks are usually given in English. Please refer to the announcements on this page for the exact date of each event.

Information for Bachelor's and Master's Students

The seminar talks required in the degree programs (the "Hauptseminar" in the Bachelor-IF/AIF program and the "Forschungsseminar" in the Master's program) can be given as part of this event. Both courses (Bachelor Hauptseminar and Master Forschungsseminar) aim for participants to work out research-relevant knowledge independently and then present it in a talk. Candidates are expected to have sufficient background knowledge, which is usually acquired by attending the lectures Neurocomputing (formerly Maschinelles Lernen) or Neurokognition (I+II). Research topics typically come from the areas of artificial intelligence, neurocomputing, deep reinforcement learning, neurocognition, and neurorobotic and intelligent agents in virtual reality. Other topic suggestions are just as welcome!

The seminar is conducted by individual arrangement. Interested students can contact Prof. Hamker without obligation if they would like to complete one of the two seminar courses with us.

Past Events

Development of a Hierarchical Approach for Multi-Agent Reinforcement Learning in Cooperative and Competitive Environments

Robert Pfeifer, Wed, 11. 9. 2024, 1/368 and https://webroom.hrz.tu-chemnitz.de/gl/jul-2tw-4nz

Multi-Agent Reinforcement Learning (MARL) enables multiple agents to learn purposeful interactions in a shared environment by maximizing their own cumulative rewards. As a subject of current research, MARL is being applied in various fields, such as robotics, traffic control, autonomous vehicles and power grid management. However, these algorithms face challenges including partial observability, non-stationarity of the environment, coordination among agents and scalability issues when incorporating a multitude of agents. This thesis explores Hierarchical Reinforcement Learning (HRL) as a potential solution to these challenges, using the Goal-Conditional Framework to decompose complex tasks into simpler sub-tasks, which facilitates better coordination and interpretability of behavior. The Goal-Conditional Framework learns this decomposition automatically in an end-to-end fashion by assigning the respective tasks to a hierarchy of policies. The higher-level policy proposes a goal vector either in a latent state representation or directly in the state space while operating on a potentially slower time scale. The lower-level policy receives the goal as part of its observation space and obtains an intrinsic reward based on how well it achieves the goal state. Only the top-level policy receives the reward signal from the environment. The thesis implements the Multi-Agent Deep Deterministic Policy Gradient (MADDPG) algorithm proposed by Lowe et al. (2020) and extends it for hierarchical learning using either a centralized or decentralized manager policy. It investigates the performance of this approach in various environments, focusing on diverse learning aspects such as agent coordination, fine-grained agent control, effective exploration and directed long-term behavior. Additionally, the thesis explores the influence of architecture, time horizon and intrinsic reward function on final performance, aiming to gain a deeper understanding of the possibilities and challenges associated with Goal-Conditional hierarchical algorithms.
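The abstract does not state the exact intrinsic reward, so the following is only a minimal sketch of one common choice in goal-conditioned hierarchical RL: the negative Euclidean distance between the state reached by the lower-level policy and the goal proposed by the higher-level policy. The function name `intrinsic_reward` and the example vectors are illustrative assumptions, not taken from the thesis.

```python
import math


def intrinsic_reward(next_state, goal):
    """Negative Euclidean distance between the reached state and the goal.

    Assumption: the goal lives directly in the state space. The abstract also
    mentions goals in a latent representation, which this sketch does not cover.
    """
    if len(next_state) != len(goal):
        raise ValueError("state and goal must have the same dimension")
    return -math.sqrt(sum((s - g) ** 2 for s, g in zip(next_state, goal)))


# The higher-level policy proposes a goal; the lower-level policy is rewarded
# for ending up close to it (values closer to zero are better).
goal = [1.0, 2.0]
print(intrinsic_reward([1.0, 2.0], goal))  # goal reached exactly
print(intrinsic_reward([4.0, 6.0], goal))  # far away from the goal
```

Other distance measures or sparse "goal reached" bonuses would fit the same interface; the abstract notes that the choice of intrinsic reward function is one of the factors the thesis studies.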

Development of AI Models for Address Data Extraction: Challenges, Methods, and Recommendations for Handling Diversity and Context Errors

Erik Seidel, Tue, 27. 8. 2024, 1/368 and https://webroom.hrz.tu-chemnitz.de/gl/jul-2tw-4nz

Precisely recognizing postal addresses is a central challenge, especially when models are also trained to recognize other entities such as persons. The resulting conflicts affect how these entities are identified. One example is the confusion between names such as "Professor Richard Paulik" and "Professor Richard Paulik Ring". Distinguishing between person names and place names is also a challenge. The central problems are the complexity, diversity, and ambiguity of the entities. Sufficiently annotated data for addresses or persons is hard to find. The thesis investigates which approaches are best suited to recognizing addresses, with a focus on Transformer models. To address the data acquisition problem, the use of synthetically generated data is considered. The goal is to achieve as broad a variety of example sentences as possible for training and evaluation. Various metrics and tools are presented that can be used to evaluate and optimize models and data.

Comparison and Verification of eXplainable Artificial Intelligence Methods Based on the Re-Identification of Persons

Simon Schulze, Tue, 13. 8. 2024, 132 and https://webroom.hrz.tu-chemnitz.de/gl/jul-2tw-4nz

Verifying methods of eXplainable Artificial Intelligence (XAI) is indispensable for making the predictions of complex, opaque machine learning (ML) models interpretable and comprehensible, and for testing the correctness of the explanations. Particularly in critical infrastructure, the algorithms in use must be examined with XAI methods in order to strengthen trust in them and demonstrate their correctness. Additionally confirming the correctness of the XAI statements further substantiates this. In this Bachelor's thesis, three ML models are used: a support vector machine (SVM), a multi-layer perceptron (MLP), and a gated recurrent unit (GRU). They are applied to the eSports sensor dataset presented by Smerdov et al. [SME+20], which contains numerous biometric attributes of individual players. XAI methods are used to analyze the most relevant characteristics of the individual players. The methods used are Local Interpretable Model-Agnostic Explanations (LIME), Shapley values, Gradients, Gradients-SmoothGrad (Gradients-SG), and Layer-Wise Relevance Propagation (LRP). Their statements are checked for correctness using various comparison and verification metrics: the size of the intersection of the most relevant attributes, the stability of the XAI methods, their descriptive accuracy, descriptive sparsity, and maximum sensitivity. It is shown that the statements of different XAI methods differ and that an individual correctness check is therefore necessary. Since, according to Payne et al. [PAY+23], biometric features may provide significant insight into the individual patterns and characteristics of a person, it is assumed that these can be captured by XAI methods. Finally, individuals are therefore re-identified solely on the basis of the XAI results obtained. It is confirmed that this is possible within certain limits.
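One of the comparison metrics named in the abstract is the size of the intersection of the most relevant attributes. A minimal sketch of how such a metric could be computed follows; ranking by absolute attribution, the function names, the feature names, and the scores are all illustrative assumptions rather than details from the thesis.

```python
def top_k_features(attributions, k):
    """Return the names of the k features with the largest absolute attribution."""
    ranked = sorted(attributions, key=lambda name: abs(attributions[name]), reverse=True)
    return set(ranked[:k])


def top_k_overlap(attr_a, attr_b, k):
    """Size of the intersection of the top-k feature sets of two explanations."""
    return len(top_k_features(attr_a, k) & top_k_features(attr_b, k))


# Hypothetical attribution scores from two XAI methods for the same prediction.
method_a = {"heart_rate": 0.9, "gsr": -0.4, "eye_x": 0.1, "emg": 0.05}
method_b = {"heart_rate": 0.8, "gsr": 0.05, "eye_x": -0.7, "emg": 0.6}

# Only "heart_rate" is among the two most relevant features of both methods.
print(top_k_overlap(method_a, method_b, k=2))
```

A small overlap for small `k` would indicate that two methods disagree about which attributes matter most, which is the kind of discrepancy the abstract reports.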

Investigation of Time Series Based AI Models for Autonomous Driving Function Validation

Vincent Kühn, Mon, 5. 8. 2024, 1/367 and https://webroom.hrz.tu-chemnitz.de/gl/jul-2tw-4nz

Before autonomous driving functions are released, they must be tested extensively during development. This is usually done in a simulation environment, because suitable vehicles are not available in the early phases of development and testing in the real world is not possible for a variety of reasons. Depending on the simulation environment and the test tools, the subsequent evaluation can be very slow. To speed up these test evaluations and make them more efficient, several modern time series analysis algorithms are selected and compared. Specifically, these are time series classification models for quickly and correctly classifying test scenarios as passed or failed, and time series forecasting models for gaining additional insight into the driving behavior under test.

IntelEns - a Reinforcement Learning based Approach for automated Time Series Forecasting

Robin Wagler, Tue, 16. 7. 2024, 1/368 and https://webroom.hrz.tu-chemnitz.de/gl/jul-2tw-4nz

Creating time series predictions and forecasts is a highly specialised process and is therefore hard to automate. On the other hand, there is a huge need for such predictions because they provide information about the future. This thesis proposes a model called Intelligent Ensemble (IntelEns), which is intended to automate the time series forecasting process. IntelEns combines a classical forecasting ensemble with reinforcement learning (RL). The idea is to train different base learners (regression models), which create individual predictions. An RL agent learns how to combine these individual predictions at every time step based on the current observation of the time series. The result is an ensemble prediction for every time step. The RL agent should learn which model to use, when, and how strongly. As RL algorithms learn through trial and error, no human supervision is needed, and the forecasting could be automated. This thesis investigates the properties and abilities of the proposed model in depth. A prototype was built using Python. Experiments are done on scientific time series data with clear patterns and on a real-world dataset from Porsche's aftersales domain. The strengths and weaknesses of the proposed approach are presented, and the question of whether an RL-based ensemble could be used to automate the time series forecasting process will be answered.
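The combination step described above can be sketched as a weighted sum of the base learners' predictions. The abstract does not say how the agent's action is turned into weights, so the softmax mapping, the function names, and the numbers below are assumptions made purely for illustration.

```python
import math


def softmax(scores):
    """Turn arbitrary real-valued scores into non-negative weights that sum to 1."""
    shift = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def ensemble_prediction(base_predictions, agent_scores):
    """Weighted sum of the base learners' predictions for one time step."""
    weights = softmax(agent_scores)
    return sum(w * p for w, p in zip(weights, base_predictions))


# Three hypothetical base learners predict the next value of the series.
base_predictions = [10.0, 12.0, 20.0]

# Hypothetical agent action: equal scores reduce to a plain average.
print(ensemble_prediction(base_predictions, [0.0, 0.0, 0.0]))

# A large score for the second learner makes the ensemble follow it closely.
print(ensemble_prediction(base_predictions, [0.0, 50.0, 0.0]))
```

In such a setup the agent would emit one score vector per time step, so it can shift weight between base learners as the series changes.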

Deep Learning for Recognition of Type Labels on Heating Systems in 3D Scenes

Goutham Ravinaidu, Thu, 4. 7. 2024, https://webroom.hrz.tu-chemnitz.de/gl/jul-2tw-4nz

This presentation will cover the background and working principles used in tackling the problem statement and present the achieved results. The thesis aims to detect the type labels present on heating systems in a video sequence and to extract the text on those labels. The presentation covers the various object detection models used to localize the labels, the use of image quality analysis to ensure quality, and the OCR models used to extract the text information. It also shows the results of the complete pipeline, which combines object detectors, quality control and OCR models. I will finally discuss the results and provide a conclusion.

Optimizing Neural Network Architectures for Fail-Degraded Computing Scenarios in Automated Driving Applications

Diksha Vijesh Maheshwari, Fri, 28. 6. 2024, 1/368 and https://webroom.hrz.tu-chemnitz.de/gl/jul-2tw-4nz

Neural networks (NNs) are commonly used in automated driving applications. With shrinking transistor size for denser integration, integrated circuits become increasingly susceptible to permanent faults. These permanent faults can lead to a complete loss of functionality, which is unacceptable in safety-critical applications. The thesis introduces a robust Fail Degradation-Aware Quantization (FDQ) strategy coupled with sensitivity prediction algorithms to maintain high algorithmic performance in the presence of permanent faults. The FDQ method improves the optimization process by incorporating losses for both higher and lower precision to compute optimal quantization step sizes for model parameters and activations. Additionally, the thesis investigates various sensitivity prediction algorithms that identify the critical components of the model, enabling strategic computational remapping of sensitive features to insensitive features to enhance model robustness and accuracy. Experimental results with ResNet18 and VGG16 models on the CIFAR-10 and GTSRB datasets showcase the effectiveness of integrating FDQ with sensitivity-driven task remapping in hardware accelerators, significantly improving the robustness of NNs against permanent faults and ensuring sustained functionality and accuracy under adverse conditions. Specifically, at the highest fault injection error rate of 6.20% for the ResNet18 model on the CIFAR-10 dataset, the FDQ method along with sensitivity remapping improved the accuracy by about 10 percentage points, from 76.60% to 87.07%.
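To illustrate what a quantization step size does, here is a minimal sketch of plain uniform symmetric quantization at two precisions. This is not the FDQ method itself, whose loss formulation the abstract does not spell out; the function names, step sizes, bit widths, and weight values are assumptions chosen for the example.

```python
def quantize(values, step, num_bits):
    """Uniform symmetric quantization: round to a grid of width `step` and clip."""
    q_max = 2 ** (num_bits - 1) - 1
    q_min = -(2 ** (num_bits - 1))
    quantized = []
    for v in values:
        level = round(v / step)                # nearest integer level
        level = max(q_min, min(q_max, level))  # clip to the representable range
        quantized.append(level * step)         # map back to a real value
    return quantized


def mse(a, b):
    """Mean squared error between two equally long sequences."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


# Hypothetical weights quantized at a higher and a lower precision.
weights = [0.31, -0.12, 0.77, -0.58]
high = quantize(weights, step=0.01, num_bits=8)
low = quantize(weights, step=0.25, num_bits=4)

# The coarser grid loses more information about the original weights.
print(mse(weights, high), mse(weights, low))
```

A method that accounts for both precisions during training, as the abstract describes for FDQ, would choose step sizes so that the error stays acceptable in the degraded low-precision mode as well as in normal operation.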

Observational Learning: New Concepts and an Exemplary Implementation of an Agent within a Virtual Environment

Leon Kolberg, Mon, 17. 6. 2024, 1/367a and https://webroom.hrz.tu-chemnitz.de/gl/jul-2tw-4nz

While 'learning by observation' comes intuitively to us humans, programming and training artificial intelligence is expensive and time-consuming. In this thesis, I introduce methods for learning by observation for artificial intelligence and present my own exemplary implementation using a neural network and Q-learning in a virtual reality. The results not only show the feasibility of observational learning in a virtual environment, but also reveal advantages, disadvantages, and risks that must be weighed.

How the Lava Software Framework Works in Comparison to ANNarchy

Andreas Schadli, Thu, 13. 6. 2024, 1/368 and https://webroom.hrz.tu-chemnitz.de/gl/jul-2tw-4nz

This Bachelor's thesis deals with the software framework LAVA, which was developed for neuromorphic hardware, in particular Intel's Loihi chip. To compare LAVA with the neural simulator ANNarchy, various experiments were carried out. Example simulations were implemented in both software packages and compared along the following questions: Is it possible to port the simulations to both packages? Are the simulation results identical, or are there differences? How do the ways in which the simulations were implemented differ? What runtime differences exist? The experiments highlight the different implementation styles, and the results show that both packages can deliver similar results and that LAVA requires significantly longer execution times than ANNarchy.

Investigation of Reward-Guided Plasticity in Recurrent Neural Networks for Working Memory Tasks

Max Werler, Thu, 16. 5. 2024, 1/368 and https://webroom.hrz.tu-chemnitz.de/gl/jul-2tw-4nz

A variety of recent works have indicated that Perturbation Learning provides a valid approach to training the recurrent weights of an artificial recurrent neural network. In this context, Miconi (2017) demonstrated how the resulting deflections of the neurons' excitation can be captured in a Hebbian manner from information that is locally available to synapses, and how these can be integrated into a reward-guided weight update rule, yielding a biologically plausible training algorithm that is capable of solving cognitive tasks. However, his learning architecture suffers from a significant flaw: it can effectively prohibit learning progress for some random initial conditions, and it can disrupt a successfully converged network, pushing it back to an error level like the one observed before training. In this thesis, we investigate these scenarios with the aim of finding the underlying network properties that cause this undesired behavior. As low intrinsic activity and an imbalance between excitation and inhibition were found to correlate strongly with these phenomena, related learning rules as well as a different input weight initialization scheme have been proposed and evaluated. While our results show that we were able to greatly enhance the speed and reliability of the initial network convergence, the possibility of a sudden deterioration of a temporarily successful network remains.

Comparison of two motor learning models of the basal ganglia and cerebellum

Christoph Ruff, Thu, 2. 5. 2024, 1/368 and https://webroom.hrz.tu-chemnitz.de/gl/jul-2tw-4nz

A model of motor learning that was developed here at the professorship by Baladron et al. [1] will be compared with a motor learning model from Todorov et al. [2]. Both models use a model of the basal ganglia (BG) and the cerebellum (CB). The BG chooses a certain action and reaches to that location, while the CB fine-tunes the reached location to come closer to the target or adapts the movement to an altered location. The two models have different structures and therefore function differently. During the seminar I will take a closer look at how these two models differ and what their advantages and disadvantages are. The tasks with which these two models were trained differ as well. As part of my internship, I trained the model from Baladron et al. [1] with the tasks of Todorov et al. [2] to see which parameter values and adaptations are necessary to get a similar result, and whether it is possible at all to train the model with these tasks. The results of this implementation will be presented as well.

Neuromorphic Computing as a Low Power, Minimal Footprint Solution for Dynamical System Control

Valentin Forch, Thu, 18. 4. 2024, 1/368 and https://webroom.hrz.tu-chemnitz.de/gl/jul-2tw-4nz

In cooperation with the Research Center for Materials, Architectures and Integration of Nanomembranes (MAIN), we develop a neural network architecture that will enable the control of autonomous modular micro robots carrying CMOS chiplets. Realizing a neural network controller on this scale poses multiple challenges: the machine must run on a minimal energy budget, have a minimal memory footprint, and can only be built on top of a low-level instruction set. Further, these machines should in principle be able to adapt to changing environments without complete re-training.

ANNarchy User Forum

Helge Ülo Dinkelbach, Julien Vitay, Thu, 11. 4. 2024, 1/368 and https://webroom.hrz.tu-chemnitz.de/gl/jul-2tw-4nz

Topics will be:


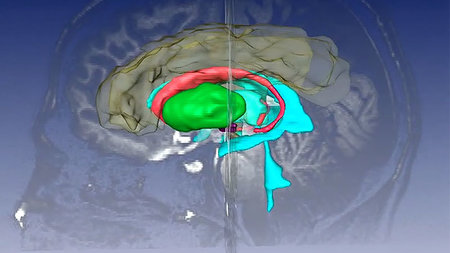

Effects of Deep Brain Stimulation on Habitual Learning in the Basal Ganglia

Dave Apenburg, Tue, 12. 3. 2024, 367 and https://webroom.hrz.tu-chemnitz.de/gl/jul-2tw-4nz

Deep brain stimulation (DBS) is an effective treatment for alleviating movement disorders in dystonia patients. A study by De A Marcelino et al. (2023) investigated how dystonia patients solve a reward reversal task with the DBS electrode in the globus pallidus pars interna (GPi) of the basal ganglia (BG) switched on or off. In this thesis, that study is reproduced with the basal ganglia model of Villagrasa et al. (2018). Since the effects of DBS are largely unexplored, four existing theories were incorporated and examined: (1) inhibition of local neurons, (2) stimulation of efferent axons, (3) stimulation of afferent axons, and (4) stimulation of passing fibers. A previous study (Baladron & Hamker, 2020) also indicates that a plastic connection (shortcut) from the cortex to the thalamus supports habitual learning in the basal ganglia. This claim was validated by switching between a fixed and a plastic shortcut. In addition, after reversal learning, a difference in the number of habitual decisions was found between the DBS variants, as well as a higher number of habitual decisions with DBS switched on than without DBS. This thesis is thus intended to contribute to a better understanding of the influence of DBS on the BG circuits and of habitual learning in the basal ganglia.

Optimizing the Neuron Model Parameters of a Spiking Network with Neuroevolution

Tom Maier, Thu, 22. 2. 2024, 367 and https://webroom.hrz.tu-chemnitz.de/gl/jul-2tw-4nz

This Bachelor's thesis is about improving the performance of converted spiking neural networks (SNNs) in order to exploit their particular properties while also achieving high accuracy. To this end, certain parameters of the SNNs are optimized in an evolutionary process using the Covariance Matrix Adaptation Evolution Strategy (CMA-ES), after conversion from a multi-layer perceptron (MLP) and normalization with the method of Diehl et al. The success of the evolution was measured by the classification accuracy on the Fashion-MNIST dataset, which was also used to obtain the values for the source network and the purely converted SNN. To examine various effects of the data on the evolution and on the performance of the SNN, different configurations of the dataset size and of the elements included were used in individual runs. Evolving the parameters improved classification to a level comparable to that of the original MLP model. This is a strong improvement in performance over the SNN that was only converted and normalized.

Time Series Forecasting of Cashflow Data using Deep Learning

Preksha Gampa, Mon, 22. 1. 2024, https://webroom.hrz.tu-chemnitz.de/gl/jul-2tw-4nz

Time series forecasting is a pivotal technique in the analysis of business operations and resource availability. It is widely used across several industries to predict future events, thereby assisting in crucial, data-driven decision-making processes. At Mercedes-Benz Mobility AG, an application called 'myCashflow' is used to provide daily forecasts of cash positioning for various Mercedes-Benz entities across the Africa and Asia Pacific (AAP) region. This application currently relies on machine learning and traditional statistical models for the analysis and forecasting of the cashflow data. These models enable the application to capture the inherent patterns present in the time series data and generate high-precision forecasts. However, with the advancement of deep learning techniques, there is potential for enhancing the forecasting capability of the myCashflow application, thereby assisting in better decision-making. This research focuses on exploring deep learning methodologies for forecasting cashflow data while addressing the challenges of high data fluctuations, short time series, and potential outliers. Three advanced deep learning methodologies are explored, namely Convolutional Neural Networks (CNN), Ensemble Empirical Mode Decomposition combined with CNN (EEMD-CNN), and transfer learning with CNN. The employed deep learning methodologies are comprehensively evaluated and compared with established machine learning and statistical models to identify the most effective and efficient approach for enhancing the predictive accuracy of cashflow forecasts.

Explainability of Machine Learning Models and Application to the 2. Fußballbundesliga

Simon Schulze, Thu, 18. 1. 2024, 1/309 and https://webroom.hrz.tu-chemnitz.de/gl/jul-2tw-4nz

Methods of eXplainable Artificial Intelligence (XAI) are indispensable for making complex and opaque algorithms of artificial intelligence (AI) and machine learning (ML) explainable and understandable. In addition, AI and ML algorithms are increasingly used for data analysis in football. This seminar paper examines the use of two XAI methods, using the 2. Fußballbundesliga as an example, to identify the statistics that are decisive for the outcome of a match. Partial dependence plots (PDPs) and Shapley values are explained and applied to models trained on datasets from the 2. Fußballbundesliga. This is intended to give the models' predictions a higher degree of explainability and comprehensibility. PDPs can be used to analyze the relationship between particular statistics and the expected match outcome, while Shapley values provide insight into the individual contribution of features to the final result. The findings are intended to give deeper insight into the key factors that decisively influence the outcome of a match. This research contributes to the growing discipline of XAI, including in football data analysis, and illustrates the potential to systematically decode complex sporting events.
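To make the idea of an individual feature contribution concrete, the following minimal sketch computes exact Shapley values by enumerating all feature coalitions. The toy value function, the feature names, and the numbers are invented for illustration and are not taken from the seminar paper; practical tools approximate this computation because exact enumeration grows exponentially with the number of features.

```python
from itertools import combinations
from math import factorial


def shapley_values(features, value):
    """Exact Shapley values for a set function `value` over `features`.

    `value` maps a frozenset of feature names to a number, e.g. the model
    output when only those features are known.
    """
    n = len(features)
    result = {}
    for f in features:
        others = [g for g in features if g != f]
        total = 0.0
        for size in range(n):
            # Weight of a coalition of this size in the Shapley formula.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                coalition = frozenset(subset)
                total += weight * (value(coalition | {f}) - value(coalition))
        result[f] = total
    return result


def toy_value(coalition):
    """Hypothetical model: additive effects plus one interaction bonus."""
    score = 0.0
    if "shots" in coalition:
        score += 2.0
    if "possession" in coalition:
        score += 1.0
    if {"shots", "possession"} <= coalition:
        score += 1.0  # bonus only when both statistics are present
    return score


phi = shapley_values(["shots", "possession", "fouls"], toy_value)
print(phi)
```

In this toy example the interaction bonus is split equally between the two interacting features, the irrelevant feature receives zero, and the contributions sum to the difference between the full and the empty coalition.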

Open-ended optimization of recurrent neuromorphic architecture through neuroevolution

Martina Kraußer, Tue, 16. 1. 2024, 1/367 and https://webroom.hrz.tu-chemnitz.de/gl/jul-2tw-4nz

While deep neural networks achieve human-level performance in some tasks, their energy consumption when implemented on von Neumann architectures is orders of magnitude above that of the brain. This motivates research on neuromorphic hardware, which consumes significantly less energy when operating neural networks. Frenkel et al. (2018) presented the ODIN neuromorphic chip, a digital spiking neuromorphic processor with minimal size and energy consumption. However, its simplified computing architecture does not allow a straightforward application of gradient-based deep learning techniques. Neuroevolution, a subfield of AI, is employed to train neural networks through evolutionary algorithms, avoiding gradient-based modification of individual weights. This can even reach better and faster results for tasks with high uncertainty about their destination, like playing games or movement control. The Paired Open-Ended Trailblazer (POET) algorithm, introduced by Wang et al. (2019), surpasses traditional neuroevolutionary approaches, which focus only on the adaptation of agents in a fixed environment, by simulating environments that change dynamically over time. POET strategically starts with simpler tasks, building skills hierarchically and expediting problem-solving abilities. The POET algorithm is used to assess the learning capabilities of the ODIN neuromorphic chip in mastering the game of Pong. The objective is to explore whether the ODIN chip exhibits a general enhancement across various game parameters, contributing to a broader understanding of its adaptability and performance in diverse Pong gaming scenarios and therefore in the task of systematically controlling movements.

Goal-directed control over action in a hierarchical model of multiple basal ganglia loops

Manuel Rosenau, Thu, 21. 12. 2023, 1/309 and https://webroom.hrz.tu-chemnitz.de/gl/jul-2tw-4nz

In the human brain, multiple cognitive functions are related to the prefrontal cortex, the basal ganglia, the thalamus, and their organization into cortical-basal ganglia thalamic-cortical loops. While the prefrontal cortex seems to be associated with goal-directed behaviour through cognitive control mechanisms, the basal ganglia with its dopaminergic inputs and its reward prediction error is often seen as a reinforcement learning circuit, affiliated with habitual behaviour. Goal-directed behaviour is associated with planned, purposeful behaviour that connects actions to specific outcomes, whereas habitual behaviour involves actions that are repeated and rewarded so often that stimuli automatically trigger them. There are still uncertainties about the specific mechanisms within the cortical-basal ganglia thalamic-cortical loops that cause a particular behaviour, and whether this can be compared to model-based or model-free behaviours. Baladron and Hamker (2020) proposed a hierarchical organization of basal ganglia loops, where each loop learns using its own prediction error signal and computes an objective for the next loop. They tested their ideas with a computational model consisting of two dorsal loops. Later the model was enhanced by adding a ventral loop prior to the dorsal loops, to select a goal signal out of an existing memory. The present thesis focuses on the question of which internal structures of the cortical-basal ganglia thalamic-cortical loops could be responsible for letting the human brain establish a habit and which ones are responsible for breaking out of a habit. To investigate this, a long indirect pathway and a shortcut between the sensory input and the thalamus in the dorsomedial loop were implemented in the three-loop model. The different approaches were then tested and compared by performing the two-stage Markov Decision task proposed by Gillan et al. (2015).

Representational alignment

Payam Atoofi, Thu, 14. 12. 2023, 1/309 and https://webroom.hrz.tu-chemnitz.de/gl/jul-2tw-4nz

Any model or system that serves as an information processing function ultimately provides a state given a stimulus, e.g., image, text, video, etc. These states can then be measured and perhaps mapped to an embedding. If the output space of an information processing function (or, in the case of an embedding, the embedding space of the process) is taken as its representational space, the extent of similarity or dissimilarity between the representational spaces of two seemingly different systems is referred to as representational alignment. There is a plethora of studies in the fields of cognitive science, neuroscience, and machine learning in which representations of the systems are explored to show and/or impose an alignment, under the assumption that there should exist a certain degree of similarity or dissimilarity. Sucholutsky et al. (2023) have proposed a framework that describes representational alignment in a unified and concise manner and envelops all the previous methods from the aforementioned disciplines. Their framework helps not only to provide a better overview and understanding of the underlying principle of such approaches and their inspirations, but also to raise awareness of the challenges and pitfalls, e.g., where representational alignment could hinder performance, or where representational alignment is not a valid assumption and this should therefore be detected. Thus, studies in cognitive science, e.g., behavioral studies, semantic cognition, human-machine alignment, etc., or in neuroscience, e.g., brain activity functions across species or across individuals, multi-modality across brain regions, etc., or in machine learning, e.g., knowledge distillation, interpretability, semantically meaningful representation, etc., could hopefully benefit from any interdisciplinary development of representational alignment.

Consciousness in Artificial Systems: Bridging Sensorimotor Theory and Global Workspace in In-Silico Models

Nicolas Kuske, Thu, 30. 11. 2023, 1/309 and https://webroom.hrz.tu-chemnitz.de/gl/jul-2tw-4nz

In the aftermath of the success of attention-based transformer networks, the debate over the potential and role of consciousness in artificial systems has intensified. Prominently, the global neuronal workspace theory emerges as a front-runner in the endeavor to model consciousness in computational terms. A recent advancement in the direction of mapping the theory onto state-of-the-art machine learning tools is the model of a global latent workspace. It introduces a central latent representation around which multiple modules are constructed. Content from any one module can be translated to any other module and back with minimal loss. In this talk we lead through a thought experiment involving a minimal setup comprising one deep sensory module and one deep motor module, which illustrates the emergence of latent sensorimotor representations in the intermediate layer connecting both modules. In the human brain, law-like changes of sensory input in relation to motor output have been proposed to constitute the neuronal correlate of phenomenal conscious experience. The underlying sensorimotor theory encompasses a rich mathematical framework. Yet, the implementation of intelligent systems based on this theory has thus far been confined to proof-of-concept and basic prototype applications. Here, the natural appearance of global latent sensorimotor representations links two major neuroscientific theories of consciousness in a powerful machine learning setup. One of several remaining questions is whether this artificial system is conscious.

Investigation of Finetuning and Transfer Learning with Transformer Architectures for Age Classification of Texts
Sebastian Neubert
Mon, 20. 11. 2023, https://webroom.hrz.tu-chemnitz.de/gl/jul-2tw-4nz
This master's thesis investigates finetuning and transfer learning for the age classification of texts using BERT-based models. Four central research questions are answered through different experiments. First, the influence of the chunk size used when splitting the dataset on model performance was examined; larger chunks yielded better overall performance. The investigation of classification layers showed that LSTM-based layers lead to better results than linear layers for this use case. Freezing BERT layers during training revealed the importance of the capacity of the classification layer for optimal accuracy. Finally, transfer learning with models previously trained on different datasets did not improve performance on the Audory dataset, but provided a deeper understanding of the prediction characteristics. Despite some limitations, this work sheds light on the depth and subtleties of age classification in texts and provides valuable insights and foundations for future research in this area.

Replication of Morris et al. 2016 and resolution proposal for prominent seemingly conflicting in vivo measurements of LIP gain-field activity
Nikolai Stocks
Thu, 16. 11. 2023, 1/309
In 2012, Morris et al. produced a set of direct in vivo measurements of areas LIP, VIP, and MST. When viewed at the population level, these neatly align with observed patterns of perisaccadic mislocalization, as well as with the broad outline of the model of perisaccadic mislocalization first developed by Ziesche and Hamker. In 2016, they then trained an intentionally simple set of integrators using linear regression on their recorded 2012 dataset, showing that their dataset allows for the easy and accurate prediction of the future eye position up to 100 ms before saccade onset, and the accurate recollection of past eye positions up to 200 ms after saccade onset. Meanwhile, Xu et al. 2012 also recorded LIP activity in vivo, providing a set of measurements that contradicts the recordings of Morris et al. and calls into question the LIP gain-field as a viable explanation for perisaccadic visual stability. I will present one possible resolution for the apparent contradiction between the datasets of Xu et al. 2012 and Morris et al. 2012 while replicating the same kind of prediction and recollection as shown in Morris et al. 2016, using data generated by an iteration of Ziesche and Hamker's model.

Dataset Refinement for Object Detection by leveraging the Training Dynamics of Deep Neural Networks
Sujay Jadhav
Mon, 30. 10. 2023, https://webroom.hrz.tu-chemnitz.de/gl/jul-2tw-4nz
Object detection involves locating the instances of semantic objects in an image and classifying them correctly. Incorrect class labels, incorrect bounding boxes, low resolution, unusual viewpoints, and heavy occlusion harm detection performance. In large object detection datasets, filtering out instances with these errors is tedious and often requires manual inspection. Our goal is to identify and prune such erroneous and uninformative instances from object detection datasets. A promising data-centric approach to diagnosing a labeled dataset at the instance level is to monitor the dynamics of the model trained on it. This thesis adapts dataset maps (Swayamdipta et al., 2020) from text-classification tasks to object detection tasks. By diagnosing the dataset maps for an object detection dataset, this thesis provides a proof of concept for object-level pruning of large detection datasets. The position of an object on the dataset map indicates its difficulty for the model in one of three categories: easy, ambiguous, or hard to learn. To further filter out the object instances that do not contribute to learning, other training dynamics are explored for their object-pruning potential, such as correctness (how often the object is correctly classified) and forgetfulness (how often the model misclassifies the object after having classified it correctly). The thesis shows that jointly pruning some easy and most of the hard-to-learn objects can improve the detection performance of the model with less data.
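
The training dynamics named above can be sketched as follows, assuming that for each object the probability assigned to its labeled class and a correct/incorrect flag have been logged after every epoch. Confidence and variability follow the usual dataset-map definition (mean and standard deviation across epochs); the function names and the two thresholds are hypothetical and are not taken from the thesis.

```python
from statistics import mean, pstdev


def training_dynamics(true_class_probs, correct_flags):
    """Summarise one object's per-epoch history.

    true_class_probs: probability of the labeled class after each epoch.
    correct_flags:    whether the object was classified correctly in each epoch.
    """
    # A forgetting event is a switch from "correct" to "incorrect" between epochs.
    forgetting = sum(
        1 for prev, cur in zip(correct_flags, correct_flags[1:]) if prev and not cur
    )
    return {
        "confidence": mean(true_class_probs),
        "variability": pstdev(true_class_probs),
        "correctness": sum(correct_flags) / len(correct_flags),
        "forgetting_events": forgetting,
    }


def map_region(stats, variability_threshold=0.2, confidence_threshold=0.5):
    """Assign a dataset-map region; both thresholds are illustrative only."""
    if stats["variability"] >= variability_threshold:
        return "ambiguous"
    if stats["confidence"] >= confidence_threshold:
        return "easy"
    return "hard"


# Made-up histories over four epochs for three objects.
easy_obj = training_dynamics([0.90, 0.95, 0.92, 0.97], [True, True, True, True])
hard_obj = training_dynamics([0.10, 0.15, 0.20, 0.10], [False, False, False, False])
ambiguous_obj = training_dynamics([0.20, 0.80, 0.30, 0.90], [False, True, False, True])

for name, stats in [("easy", easy_obj), ("hard", hard_obj), ("ambiguous", ambiguous_obj)]:
    print(name, map_region(stats), stats)
```

A pruning rule in this setting would then drop objects by region or by forgetting count; which combination helps is exactly the empirical question the thesis addresses.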

Predicting Remaining Useful Lifetime of Solenoid Valves Using Machine Learning Techniques
Syed Anas Ali
Mon, 25. 9. 2023, https://webroom.hrz.tu-chemnitz.de/gl/jul-2tw-4nz
The FESTO VYKA valve is used in many industries and plays a pivotal role in industrial control, automation, and pneumatics. These valves degrade with time and usage. This thesis uses artificial intelligence and deep learning to find methods for predicting the Remaining Useful Life (RUL), expressed as the number of switches remaining until failure. RUL prediction is extremely useful in predictive maintenance. It is a crucial concern in prognostic decision-making and saves costs and time. RUL is calculated using only measurements from current and voltage sensors, and PCA-based cascaded LSTMs and convolutional LSTM-based approaches are compared. The data for this thesis was gathered from endurance tests explicitly designed to run these valves to failure. Convolution kernels integrated with cascaded LSTMs yielded better RUL estimations than the PCA-based deep learning approaches. Two-dimensional convolutions were able to capture the features of ageing valves, and they gave the best results when integrated with cascaded LSTMs. Separating the failure types improved the RUL estimations. However, the current RUL estimations could be improved by gathering more valve data and generalising further.

Classification of Manual ACC Aborts in Vehicle Tests Using Deep Learning
Victoria Nöther
Wed, 13. 9. 2023, 1/336 and https://webroom.hrz.tu-chemnitz.de/gl/jul-2tw-4nz
Driver assistance systems are increasingly used in road traffic. Beforehand, however, they must be tested comprehensively. Many of these tests have to take place in the vehicle, since spontaneous human driving decisions are difficult to simulate. Vehicle tests, however, are very costly and time-consuming. This thesis investigates how human driving decisions can be classified automatically. It focuses solely on adaptive cruise control (ACC) and its manual aborts performed by the driver. Data from vehicle tests are used to train deep learning models. Five deep learning models are compared: a one-dimensional Convolutional Neural Network, Long Short-Term Memory, Gated Recurrent Unit, and their combinations. The Convolutional Neural Network model achieves an F1 score of 93.88%. The other models achieve F1 scores of 94.57% but cannot correctly predict ACC aborts. The Convolutional Neural Network model is the only one that succeeds in correctly classifying the aborts, as can be seen from its confusion matrix. This thesis shows that deep learning methods can be successful in classifying human driving decisions.

Parkinsonian beta oscillations in the pallido-striatal loop influencing action cancellation
Iliana Koulouri
Wed, 16. 8. 2023, 1/273 and https://webroom.hrz.tu-chemnitz.de/gl/jul-2tw-4nz
Parkinson's disease (PD) is a neurodegenerative disorder caused by the loss of dopamine neurons. Under dopamine-depleted conditions such as PD, synchronized oscillatory activity in the beta frequency band occurs in the basal ganglia (BG). These are in turn associated with the control of motor actions. The source of these oscillations is still unknown. Besides theories that locate the generation of beta oscillations in the STN-GPe loop, the striatum, or the cortex, the pallido-striatal loop, which consists of GPe neurons, fast-spiking striatal interneurons (FSIs), and STRD2 neurons, is a further possible source of beta oscillations in PD. Corbit et al. (2016) showed that a model of the pallido-striatal loop is able to generate and amplify beta oscillations under dopamine-depleted conditions. This approach, together with a biologically plausible FSI neuron model, was transferred into the existing BG model (Gönner et al., 2020) in order to test how beta oscillations can propagate through the entire BG network and what influence they have on performance in a stop-signal task. According to the current state of work, it appears that theta rather than beta oscillations propagate through the network.

Computing the Brain-Score for Biologically Motivated Neural Networks
Elina König
Tue, 8. 8. 2023, 1/273
The Brain-Score is a metric that quantifies the performance of neural networks in comparison to the human brain. The online platform Brain-Score.org specializes in this evaluation and in the comparison of networks. The researchers make it possible to upload models; the performance of the submissions is then evaluated on various benchmark tasks. These comprise typical visual stimuli to which the human brain can respond. The focus of this work lies on the ability of the ANNarchy model to predict neural responses to visual stimuli that were observed in the early visual areas. The network is compared, with respect to its prediction accuracy, with previously submitted models such as AlexNet, VGG16, and ResNet. The investigation provides insights into the limits and challenges of the modeling and into possible strategies for improving performance.

Development of a Graph-Based Recommender System Based on Customer-Product Relationships Using a Big Data Platform
Haitham Almoughraby
Mon, 24. 7. 2023, https://webroom.hrz.tu-chemnitz.de/gl/jul-2tw-4nz
The bipartite graph is a widely used structure for establishing a relationship between two populations and has recently been used for personalized recommendations. The one-mode projection method is suited to projecting one layer out of the bipartite graph, so that one obtains a graph consisting of only one group of nodes. Its edges are created between nodes when certain conditions are met, e.g., when two nodes in the original graph are both connected to at least one node of the other layer, in which case they are referred to as similar neighbors. Since a lot of information is lost through this projection, a weighting method is necessary in order to retain as much of the original information as possible. In this master's thesis, different algorithms that produce weighted one-mode graphs during the projection were compared. Based on these graphs, a recommender system was developed that focuses only on the relationships between products, and its quality was measured. Since the structure of the graph plays a central role, the influence of the weights on the graph is observed and the community detection of the graph is examined.
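
A weighted one-mode projection of a customer-product graph onto its product layer can be sketched as follows. The Jaccard weighting (shared customers divided by all customers of either product) is an assumption chosen for illustration; the abstract does not name the weighting algorithms that were compared, and the customer and product names are made up.

```python
from collections import defaultdict
from itertools import combinations


def project_onto_products(purchases):
    """Build a weighted product-product graph from (customer, product) edges."""
    buyers = defaultdict(set)
    for customer, product in purchases:
        buyers[product].add(customer)

    weights = {}
    for p, q in combinations(sorted(buyers), 2):
        shared = buyers[p] & buyers[q]
        if shared:  # an edge exists only if at least one customer bought both
            weights[(p, q)] = len(shared) / len(buyers[p] | buyers[q])
    return weights


def recommend(product, weights, top_n=3):
    """Rank the neighbours of a product in the projected graph by edge weight."""
    scores = {}
    for (p, q), w in weights.items():
        if p == product:
            scores[q] = w
        elif q == product:
            scores[p] = w
    ranked = sorted(scores, key=lambda item: (-scores[item], item))
    return ranked[:top_n]


# Made-up purchase data.
purchases = [
    ("anna", "bread"), ("anna", "butter"),
    ("ben", "bread"), ("ben", "butter"), ("ben", "jam"),
    ("cara", "jam"), ("cara", "tea"),
]
product_graph = project_onto_products(purchases)
print(product_graph)
print(recommend("jam", product_graph))
```

An unweighted projection would treat the bread-jam and bread-butter edges alike, although bread and butter share all of their customers; the weight preserves that difference.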

Generalized Event Discovery with end-to-end learning for Autonomous Driving
Chaithrashree Moganna Gowda
Fri, 14. 7. 2023, https://webroom.hrz.tu-chemnitz.de/gl/jul-2tw-4nz
Safety conformity is one of the major requirements for Autonomous Driving, and even with continuous improvement in AI technologies this requirement must be met before deploying the vehicle on roads in an open context. To improve safety assessment and react in a timely manner to external events, the vehicle must know what kind of situation it is currently in. Hence it is important to analyze the series of events happening in the surroundings of the driving environment. An existing approach to this problem of Time-to-Event prediction, i.e., predicting events before they occur, is Future Event Prediction in video data (Neumann et al., 2019). That paper addressed two key challenges: whether a certain type of event, such as a car stopping, is going to happen, and if so, when. In the past, this state-of-the-art work on Event Prediction was extended to a set of event classes such as Sudden Brake, Turn, and Anomaly. In that work, the data was prepared with a Weakly Supervised approach before model development, using synchronized sensor measurement data. For every such task, there is a huge amount of real-world driving data available, collected with various sensors in an autonomous vehicle. To utilize this collected data effectively, it is necessary to interpret the elements in the data in order to categorize or label them. Labelling this data for Autonomous Driving is a tedious process. The aim is to utilize this unlabelled data to improve the artificial intelligence algorithms developed for Autonomous Driving. Hence it is necessary to move from a Weakly Supervised approach to Semi-Supervised Learning to analyze the series of events.
A new approach called Generalized Category Discovery (GCD) (Vaze et al., 2022) addresses the setting in which, given a labelled and an unlabelled set of images, the task is to categorize all images in the unlabelled set. The unlabelled images may come from labelled classes or from novel ones. This existing state-of-the-art approach is adapted as a baseline for Generalized Event Discovery (GED), i.e., for categorizing unlabelled video sequences. The main contribution of this work is to discover various known and unknown event classes in an unlabelled data set. A 3D ConvNet architecture used in Future Event Prediction serves as a backbone in this experiment to extract the feature vectors for the events. An Event Estimation model designed to categorize different event classes is also used as a backbone to extract the feature vectors for the events, and the two models are compared. Clustering is performed directly in the feature space, using the effective Semi-Supervised K-means++ clustering method introduced in GCD to cluster the unlabelled data into known and unknown classes automatically. The proposed Generalized Event Discovery (GED) method has been evaluated on the standard BDD100k benchmark dataset and on real-time in-house ADAS data.

Surround delay leads to spatiotemporal behavior in the early visual pathway - part 2
René Larisch
Tue, 11. 7. 2023, 1/375
The spatial receptive fields along the early visual pathway - from the retinal ganglion cells (RGC) over the lateral geniculate nucleus (LGN) to the primary visual cortex (V1) - are not fixed but have been shown to change their structure over a short time interval. This temporal behavior adds the time dimension to the 2D spatial receptive field and leads to spatiotemporal receptive fields (STRF), suggesting processing of time-relevant visual stimuli, like the directional movement of an object. Different studies on cats and mice have shown that STRFs can differ in their appearance, from a more separable to a more inseparable characteristic. Although a vast number of models exist to simulate STRFs and investigate their functionality (especially for direction selectivity), most of these models use a non-linear function to implement the change of the spatial receptive field over time and do not explain how STRFs emerge on a more neuronal level. Additionally, recent models indicate a more important role of intercortical inhibition for direction selectivity. In this talk, I give a short overview of different V1 simple cell models of STRFs and direction selectivity. Further, I will show how a delay in the surrounding field of the RGCs, an assumption reported previously, leads to STRFs in the LGN and the V1/L4 simple cells, and how the characteristics of STRFs are influenced by so-called lagged LGN cells. At the end of the talk, I will discuss how direction selectivity is influenced by lagged LGN cells and intercortical inhibition.

Surround delay leads to spatiotemporal behavior in the early visual pathway
René Larisch
Tue, 6. 6. 2023, 1/375
The spatial receptive fields along the early visual pathway - from the retinal ganglion cells (RGC) over the lateral geniculate nucleus (LGN) to the primary visual cortex (V1) - are not fixed but have been shown to change their structure over a short time interval. This temporal behavior adds the time dimension to the 2D spatial receptive field and leads to spatiotemporal receptive fields (STRF), suggesting processing of time-relevant visual stimuli, like the directional movement of an object. Different studies on cats and mice have shown that STRFs can differ in their appearance, from a more separable to a more inseparable characteristic. Although a vast number of models exist to simulate STRFs and investigate their functionality (especially for direction selectivity), most of these models use a non-linear function to implement the change of the spatial receptive field over time and do not explain how STRFs emerge on a more neuronal level. Additionally, recent models indicate a more important role of intercortical inhibition for direction selectivity. In this talk, I give a short overview of different V1 simple cell models of STRFs and direction selectivity. Further, I will show how a delay in the surrounding field of the RGCs, an assumption reported previously, leads to STRFs in the LGN and the V1/L4 simple cells, and how the characteristics of STRFs are influenced by so-called lagged LGN cells. At the end of the talk, I will discuss how direction selectivity is influenced by lagged LGN cells and intercortical inhibition.

Demonstration of Offline Reinforcement Learning on an Embedded System
Robin Gerstmann
Fri, 2. 6. 2023, https://webroom.hrz.tu-chemnitz.de/gl/jul-2tw-4nz
This thesis demonstrates the challenge of offline reinforcement learning on an embedded system. An offline reinforcement learning algorithm is trained in a simulation and later transferred to the embedded platform. The task is for an AI-controlled ball (controlled via Bluetooth) to hit another ball. The ball can act within a bounded field. To make the task more challenging for the offline reinforcement learning algorithm, two obstacles are added. To enable execution on the existing real demonstrator, a YOLO version 7 model has to be trained, which provides the information for the offline reinforcement learning algorithm. In this way, the feasibility and the combination of different AI algorithms on an embedded system are to be demonstrated.

Generalization Ability of Crop State Classification Models in Harvesting Scenarios
Preethi Venugopal
Fri, 26. 5. 2023, https://webroom.hrz.tu-chemnitz.de/gl/jul-2tw-4nz
Neural networks have achieved remarkable progress in various fields of application. In real-world scenarios, it is of the greatest importance to estimate how well deep learning models and algorithms generalize and how accurately they predict unseen data. Good generalization performance is the fundamental goal of any algorithm. Developing a model that generalizes well is critical because it has to predict accurately on data that is related, but not identical, to the training data. In this thesis, the classifier's ability to generalize is measured for the FLP (Forward Looking Perception) system developed by John Deere for combine harvesters. A ResNet architecture is chosen to determine and classify the states of the crop (down crop and standing crop) ahead of the machine. The thesis studies how well the classifiers for down crop detection generalize across various crops and regions. To determine the generalization ability of the model, dataset-based investigation and hyperparameter tuning methods are chosen. Various experiments are carried out on crops such as wheat and barley from different regions. The collected images are not fed directly into the neural network; instead, they are broken down into patches and labeled. Labeled data is taken from the existing database. These patches are used for further training. Several basic data sets are prepared, in each of which one aspect of variation is fixed. For example, the crop type is fixed to wheat but the region varies. This creates data from each region. Combining two or three different regions adds more variety to the training. A series of training and testing runs on various data sets is performed, and the generalization ability is evaluated using a confusion matrix and mainly by comparing the F-score for down crop.
This thesis mainly investigates the generalization ability of such classifiers.

Perturbation Learning in the Context of Reservoir Computing
Max Werler
Tue, 16. 5. 2023, 1/375
Over the last decades, a variety of training approaches for recurrent neural networks have been proposed, along with slightly differing network architectures. One category of learning approaches can be summarized under the name of perturbation learning. Instead of analytically calculating the gradient of a network and backpropagating an error or reward signal, perturbation learning tries to approximate the gradient by adding random noise to the nodes and/or weights of the network and observing the change in performance. Depending on that performance change, some degree of the noise is committed permanently to the network. This presentation briefly introduces reservoir computing and takes a deeper look at recent studies on perturbation-based learning approaches. Related results as well as my own experimental findings will be presented too. In the latter, Miconi's advancement of node perturbation was compared with the weight perturbation strategy of Zuege et al. by challenging correspondingly implemented networks to solve the Delayed-Nonmatch-To-Sample task and to control a simulated robot arm. My own findings align with the results of Zuege et al. and demonstrate the ability of weight perturbation to keep up with and even outperform node perturbation.
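
The basic idea of weight perturbation can be sketched on a deliberately tiny problem. The example below assumes a two-weight linear model and a toy regression task instead of a reservoir network, and it uses a generic textbook-style update (noise scaled by the observed loss change); it is not the specific rule of Miconi or of Zuege et al., and all names and constants are made up for illustration.

```python
import random


def mse(weights, inputs, targets):
    """Mean squared error of a linear model without bias."""
    total = 0.0
    for x, y in zip(inputs, targets):
        prediction = sum(w * xi for w, xi in zip(weights, x))
        total += (prediction - y) ** 2
    return total / len(inputs)


def weight_perturbation_step(weights, inputs, targets, rng, sigma=0.01, lr=0.02):
    """Perturb all weights with noise and keep a share of that noise.

    The share is proportional to the loss change: noise that lowered the loss
    is added to the weights, noise that raised it is subtracted.
    """
    noise = [rng.gauss(0.0, sigma) for _ in weights]
    base = mse(weights, inputs, targets)
    perturbed = mse([w + n for w, n in zip(weights, noise)], inputs, targets)
    scale = -lr * (perturbed - base) / sigma ** 2
    return [w + scale * n for w, n in zip(weights, noise)]


rng = random.Random(0)  # fixed seed so that the run is reproducible
inputs = [(1.0, 0.0), (0.0, 1.0), (1.0, 1.0), (2.0, 1.0)]
targets = [2.0 * x1 - 1.0 * x2 for x1, x2 in inputs]  # generated with weights (2, -1)

weights = [0.0, 0.0]
initial_loss = mse(weights, inputs, targets)
for _ in range(2000):
    weights = weight_perturbation_step(weights, inputs, targets, rng)
final_loss = mse(weights, inputs, targets)

print(f"loss before: {initial_loss:.4f}, loss after: {final_loss:.6f}")
print("learned weights:", [round(w, 3) for w in weights])
```

No gradient is computed anywhere; on average, the committed noise points along the negative gradient. Node perturbation applies the same principle to unit activations instead of weights.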

Few Shot Learning for Material Classification at Drilling Machines
Rohina Roma Dutt
Thu, 4. 5. 2023, https://webroom.hrz.tu-chemnitz.de/gl/jul-2tw-4nz
In this study, we investigate the application of few-shot learning (FSL) techniques for material classification in drilling machines, utilizing time series data. The lack of labeled data and difficulties in data preparation pose significant challenges, which were addressed by employing basic and advanced preprocessing techniques, along with a specialized data generator function. The research question focuses on identifying the optimal FSL approach for material classification in drilling machines. To this end, a range of models, including baseline models and Prototypical Networks, were evaluated. The experiments were conducted in an industrial environment for drilling operations, and the results showed that the hybrid model, a combination of 1D CNN and LSTM layers, outperformed the other models. The Proto-Hybrid model was compared to a baseline model based on the mean average precision (mAP) metric, which demonstrated the superiority of the proposed model. This work contributes to the field of FSL by highlighting the potential benefits of incorporating hybrid model structures in future research on material classification tasks. It also underscores the importance of exploring and comparing different FSL approaches to determine the best one for a given dataset. Further research should address limitations and explore alternative FSL methods to improve model performance in material classification for drilling machines and other industrial applications. The Proto-Hybrid model provides a valuable contribution to the field of material classification, showing promising results and offering the potential to improve accuracy with more examples per class (shots).

Evaluation and Implementation of 3D Container Problem Algorithms Based on Artificial Intelligence
Shubhangi Bhor
Fri, 17. 3. 2023, https://webroom.hrz.tu-chemnitz.de/gl/jul-2tw-4nz
3D container packing optimization is one of the main problems in the logistics industry. Tons of packages or units need to be packed into containers to provide a smooth process for the production industry. Optimized container packing reduces the number of containers on the road and thereby indirectly helps the environment by lowering CO2 emissions. Different approaches help to solve this NP-hard bin packing problem, such as branch and bound, branch and cut, greedy algorithms, heuristic algorithms, and machine learning models. This master's thesis tackles some of these problems, such as reducing the number of containers as well as costs, by implementing and evaluating different artificial intelligence techniques.

Application Of Virtualization Concepts in Linux Distributed Industrial Controllers For Robotics
Suryakiran Suravarapu
Thu, 9. 3. 2023, https://webroom.hrz.tu-chemnitz.de/gl/jul-2tw-4nz
The emergence of technologies such as IoT and Cloud has revolutionized the way data is stored, distributed, and processed. The development of applications has shifted from core legacy applications to portable and distributed microservices, bringing virtualization and containerization concepts to the forefront for building scalable mobile applications. Despite this, industrial robotics has not fully utilized these concepts due to constraints such as real-time requirements and application robustness, as such systems are limited to the control plant. Our research proposes an architecture that reduces deployment time and hardware resource usage by creating robust and portable ROS application packages as microservices through containerization and virtualization concepts. Additionally, we propose an orchestration tool to monitor and manage these containers while ensuring real-time constraints are met by running the ROS application packages on a real-time kernel. Furthermore, our research addresses seamless integration and deployment (CI/CD) in an offline (air-gapped) environment.

A comparison of machine learning and computer vision approaches for row crop segmentation
Kundan Kumar Agnuru
Fri, 24. 2. 2023, https://webroom.hrz.tu-chemnitz.de/gl/jul-2tw-4nz
Agriculture is one of the essential practices for the survival and progress of humanity. Applying modern technologies in agricultural practice enables the analysis of plant characteristics and increases productivity. Row crop segmentation is one of the approaches in precision agriculture that helps in analyzing and evaluating features of plants and in segmenting them into regions based on characteristic similarities. Row crop segmentation helps to guide semi-automated or autonomous vehicles between crop rows during spraying and harvesting. The purpose of this study is to evaluate different approaches based on unsupervised learning and computer vision techniques for row crop segmentation, using multispectral images as the data source. The multispectral images are processed with filters to remove noise. The filtering results are assessed subjectively to select a suitable filter. The region of interest is extracted and vegetation indices are calculated from the denoised multispectral images. The best of the calculated vegetation indices are stacked together and used as input for the unsupervised and computer vision segmentation approaches. The segmentation maps are evaluated by applying a line detection algorithm and comparing the results using the Intersection over Union metric.
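
The Intersection over Union metric mentioned above can be sketched for two binary masks as follows. The masks are made-up toy data, and treating two empty masks as a perfect match is a convention assumed here, not something stated in the abstract.

```python
def intersection_over_union(predicted, reference):
    """IoU of two binary masks given as equally sized nested lists of 0/1."""
    intersection = 0
    union = 0
    for row_p, row_r in zip(predicted, reference):
        for p, r in zip(row_p, row_r):
            if p and r:
                intersection += 1
            if p or r:
                union += 1
    # Assumed convention: two empty masks count as a perfect match.
    return intersection / union if union else 1.0


# Toy 2x4 masks: 1 marks pixels that belong to a crop row.
predicted_rows = [
    [0, 1, 0, 1],
    [0, 1, 0, 1],
]
reference_rows = [
    [0, 1, 0, 0],
    [0, 1, 1, 0],
]

iou = intersection_over_union(predicted_rows, reference_rows)
print(f"IoU = {iou}")  # 2 shared pixels out of 5 marked in either mask -> 0.4
```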

Modeling Perisaccadic Mislocalization Taking Visual Attention into Account
Nikolai Stocks
Wed, 22. 2. 2023, Room 309 and https://webroom.hrz.tu-chemnitz.de/gl/jul-2tw-4nz
Human visual perception is not a passive process in which stimuli are measured by sensory organs such that the sum of these measurements produces our experienced environment. Instead, it is an interplay of active and passive processes. Responses of participants in certain experiments in turn allow conclusions about the internal dynamics and properties of these processes. Of particular interest here are experiments that investigate the systematics of mislocalization. For this work, the model of Ziesche and Hamker (2011) was extended by a spatial attention signal coupled to the saccade, in an attempt to replicate the influence of stimulus contrast on perisaccadic mislocalization observed by Georg et al. (2008). Furthermore, the model was extended by a newly structured two-stage process for the integration and evaluation of sensory information, which had already proven itself in other, similar model iterations. It turned out that this new source of visual attention was able to replicate the kind of effect described by Georg et al. (2008), but on its own is not sufficient to fully explain the behavior observed in humans.

Improving industrial object recognition through fusion of physical properties
Maulik Jagtap
Thu, 16. 2. 2023, https://webroom.hrz.tu-chemnitz.de/gl/jul-2tw-4nz
Classification of industrial parts is a very challenging and time-consuming task. Because of this problem, seven percent of industrial parts that could be re-manufactured end up as waste every year. The advent of machine learning makes it possible to classify industrial parts. However, when only an image is used to train the model, separating objects into their respective classes is difficult because all objects look similar. As every object has physical properties such as weight and height, this study aims to determine whether fusing an object's physical properties with the image as extra features makes a model more robust and improves classification accuracy. According to a review of the literature on fusion, multi-modal fusion can help achieve this goal. Different modalities can be merged to create features. The types of fusion include early fusion, late fusion, and hybrid fusion. The image provides individual features of the object, while the physical properties are numerical, so various encoding techniques, such as standard deviation, one-hot encoding, and sin-cos encoding, are used to fuse the image and the physical properties at classification time. Combining the image with the object's physical properties improves model accuracy, and sin-cos encoding gives better results than the other encoding methods.

Central Pattern Generators in robot control
Joris Liebermann
Thu, 9. 2. 2023, Room 1/367a and https://webroom.hrz.tu-chemnitz.de/gl/jul-2tw-4nz
Central Pattern Generators (CPGs) are neural circuits that play a crucial role in the coordination of movement in vertebrates. They allow for the generation of rhythmic and non-rhythmic movement patterns without the need for input from higher cortical areas and provide a mechanism for adaptive and recovery behaviors. The use of CPGs in robot control offers a biologically inspired approach to low-level control with the potential to generate a wide range of movement patterns. By extending mathematical models of CPGs, researchers have developed a Multi-layered Multi-pattern CPG (MLMP-CPG) architecture that can generate rhythmic and non-rhythmic patterns with only a few parameters. This MLMP-CPG model has shown promising results as a low-level controller for robots. It provides a flexible and adaptive solution to the problem of movement coordination in robotics, which is demonstrated by examples of a humanoid robot in the context of walking and drawing.

A Neural-Gas inspired Spiking Neural Network
Lucas Schwarz, Thu, 9. 2. 2023, Room 1/367a and https://webroom.hrz.tu-chemnitz.de/gl/jul-2tw-4nz
Machine Learning models based on Teuvo Kohonen's original work on Self-Organizing Maps and Learning Vector Quantizers remain a credible alternative to commonly used Neural Networks in cases where interpretability, decision stability, and explainability are desired and constrained hardware is being used. In recent years, there have been investigations into utilizing alternative computational paradigms and hardware like Quantum Computing and Neuromorphic processors enabling the efficient use of Spiking Neural Networks (SNNs). To shed additional light on the capabilities of the principles which guide SNNs, an adapted version of the unsupervised 'Neural Gas' algorithm has been developed. Neural Gas is mainly used for lossy data compression and clustering due to its favourable response to differences in data density. Using Spiking Neurons and Spike Timing Dependent Plasticity (STDP) requires a coding for data, which is usually available as float-valued vectors. The presented coding, based on previous work by Bohte et al., transforms those vectors into spike delay times using spatially distributed, fixed radial basis functions. It is used to build the Neural Gas cost function, which generates an explicit learning rule, implicitly into the architecture of the developed model. Initial results and observations regarding stability and parallel developments are also considered in this presentation.
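
For reference, the classical (non-spiking) Neural Gas adaptation step ranks all prototypes by their distance to the input and moves each one toward it with a step size that decays exponentially with its rank. The sketch below shows that rule in plain Python. It is not the spiking model presented in the talk, and the parameter values are arbitrary.

```python
import math

def neural_gas_step(prototypes, x, eps=0.5, lam=1.0):
    """Return the prototypes after one Neural Gas update for input x.

    eps is the learning rate, lam the neighbourhood range in rank space.
    """
    # Squared Euclidean distance of every prototype to the input.
    dists = [sum((w_j - x_j) ** 2 for w_j, x_j in zip(w, x)) for w in prototypes]
    # Prototype indices ordered from closest (rank 0) to farthest.
    order = sorted(range(len(prototypes)), key=lambda i: dists[i])
    updated = [list(w) for w in prototypes]
    for rank, i in enumerate(order):
        h = math.exp(-rank / lam)  # rank-based neighbourhood factor
        updated[i] = [w_j + eps * h * (x_j - w_j)
                      for w_j, x_j in zip(prototypes[i], x)]
    return updated

protos = neural_gas_step([[0.0, 0.0], [10.0, 10.0]], [1.0, 1.0])
```

In this example the closest prototype moves halfway toward the input, while the second-ranked prototype moves by the same rule scaled by exp(-1).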

Self-Supervised Pretraining for Robotic Bin Picking by Using Image Sequences
Ralf Brosch, Wed, 1. 2. 2023, https://webroom.hrz.tu-chemnitz.de/gl/jul-2tw-4nz
Robotic bin picking in warehousing has the advantage of reducing laborious and monotonous work. For this, the object detection systems of the bin picking robots have to be reliable and accurate. However, data often has to be labeled manually to train instance segmentation models and achieve high accuracy for object detection. To overcome the costly and time-consuming process of labelling, this thesis presents three different Self-Supervised Learning (SSL) methods which make use of automatically generated labels. The labels are created according to the specific requirements of the pretext task. The first method uses warehouse data to classify images between different articles. The second method generates an instance label for every successful pick. The third method uses the temporal aspect of the bin picking data to classify the ordering of images. All three proposed pretext tasks take advantage of the bin picking procedure itself to automatically generate the required labels. Each pretext task pretrains the ResNet-50 backbone of a Mask R-CNN instance segmentation model. The goal of this procedure is to learn features in the backbone which are useful for the downstream task of instance segmentation. The results show that the first and third methods are not useful for pretraining an instance segmentation backbone. However, the second method manages to improve the backbone and increases performance by 7% for articles without manually labeled data. On the other hand, it decreases performance by around 2.5% for articles where manually labeled data is available.
The findings in this thesis show that image sequences can successfully be used to generate labels for SSL models and that pretraining a ResNet-50 backbone with a proper pretext task can lead to a performance improvement in the downstream task of instance segmentation.

ECG-based Heartbeat Classification in the Neurosimulator ANNarchy
Abdul Kampli, Thu, 19. 1. 2023, https://webroom.hrz.tu-chemnitz.de/gl/jul-2tw-4nz
Electrocardiogram (ECG) measurements, which are frequently used to detect cardiac disorders due to their non-invasive nature, can be used to monitor heart function. Cardiologists with the right training can identify irregularities by visually reviewing recordings of the ECG signals. Arrhythmias, however, can be missed in routine check recordings because they happen sporadically, especially in the early phases. Spiking Neural Networks (SNNs) have dynamic characteristics, which makes them well suited to dynamic processes. The two-stage SNN architecture consists of a recurrent network, or reservoir, of spiking neurons whose output is classified by a cluster of integrate-and-fire (IF) neurons that have been trained in a supervised manner to differentiate between two types of cardiac rhythms. To activate the recurrent SNN, we present a technique for encoding ECG signals into a stream of asynchronous digital events. We address the problem of supervised learning in multi-layer spiking neural networks that can encode time. In order to train multi-layer networks of deterministic integrate-and-fire neurons to execute non-linear computations on spatiotemporal spike patterns, we first design SuperSpike, a nonlinear voltage-based three-factor learning rule. On the PhysioNet Arrhythmia Database provided by the Massachusetts Institute of Technology and Beth Israel Hospital (MIT/BIH), we demonstrate an overall classification accuracy of 90-92%. The proposed system was developed in ANNarchy (Artificial Neural Networks Architect), a neural simulator created for distributed rate-coded or spiking neural networks.
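
The abstract does not say how the ECG signal is converted into asynchronous events. One common scheme for this kind of conversion is delta modulation, where an UP or DOWN event is emitted whenever the signal has moved by a fixed threshold since the last event. The following is a minimal sketch under that assumption; the function name, threshold, and sample values are hypothetical and not taken from the thesis.

```python
def delta_encode(signal, threshold):
    """Convert a sampled signal into (sample_index, polarity) events.

    Polarity +1 marks an upward crossing of the threshold,
    polarity -1 a downward crossing, relative to a tracking reference.
    """
    events = []
    reference = signal[0]
    for t, value in enumerate(signal[1:], start=1):
        # Emit one UP event per threshold step the signal has risen.
        while value - reference >= threshold:
            reference += threshold
            events.append((t, +1))
        # Emit one DOWN event per threshold step the signal has fallen.
        while reference - value >= threshold:
            reference -= threshold
            events.append((t, -1))
    return events

# Toy signal: rises by two threshold steps, then falls by more than one.
events = delta_encode([0.0, 0.5, 1.0, 0.25], threshold=0.5)
```

The resulting UP and DOWN events could be fed to two input channels of a spiking network; flat signal segments produce no events at all, which keeps the event stream sparse.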

Embedded Neuromorphic Computing at the FZI Forschungszentrum Informatik
Brian Pachideh, Wed, 11. 1. 2023, https://webroom.hrz.tu-chemnitz.de/gl/jul-2tw-4nz
Neuromorphic engineering takes inspiration from the brain to develop novel processing methods and technologies that enable efficient machine intelligence; its key technology is Spiking Neural Networks (SNNs) for the processing of event-data streams. The field has seen a surge in research following recent developments. One example is the emergence of ultra-low power neuromorphic hardware architectures for the acceleration of SNNs, and another is the commercialization of the first neuromorphic sensor, the event-based camera, which serves as a native source of event-data streams. At the FZI Research Center for Information Technology, we are actively exploring the potential of neuromorphic engineering through a variety of publicly funded projects. Our current application domains include smart health, smart city, and automotive. Our research focuses on the implementation of event-based computing and communication hardware on FPGAs, as well as their integration in embedded systems. This seminar serves as an introduction to the field of embedded neuromorphic engineering. It will cover the fundamentals of SNNs, give a technical overview of SNN hardware acceleration and event cameras, provide open source tools for SNN development, and finally highlight related projects at FZI.

Meta-Learning and Neuroevolution - part 2
Valentin Forch, Wed, 7. 12. 2022, Room 1/368a and https://webroom.hrz.tu-chemnitz.de/gl/jul-2tw-4nz
To build neural network models, researchers rely on their intuitions, reasoning, or knowledge about what worked best for other people with similar problems. However, most often, it is not clear which exact model topology, hyperparameter set, or training schedule will give satisfactory results. This can lead to a laborious cycle of model adjustments and evaluations. Moreover, a good model may be too complex to be even considered by humans. The past decade has shown that doing away with expert knowledge and automating the process of knowledge extraction with deep learning can give tremendous results, e.g., when learning the game of Go without any human supervision. Considering the exponential growth of available computing power, it appears logical to forego the manual design of neural network models and instead use parallelizable optimization algorithms. The main classes of these meta-optimizers are meta-learning and evolutionary search. Both enable the optimization of virtually every part of a learning system, opening up new spaces for building more powerful and also biologically more plausible neural networks.

Deep Neural Networks and Implicit Representations for 3D Shape Deformation
Aida Farahani, Wed, 30. 11. 2022, Room 1/368a and https://webroom.hrz.tu-chemnitz.de/gl/jul-2tw-4nz
Geometric deep learning is a promising approach to bring the power of deep neural networks to 3D data. Explicit 3D representations such as meshes are not easily combined with neural networks, as there is no unique mesh to represent a single geometry. Another explicit form, the point cloud, is an unordered set of points sampled on the surface and can have a varying and often huge number of dimensions, which limits its use as an input to a neural network. In contrast, implicit representations such as signed distance functions (SDFs) define a 3D shape as a continuous function that can be approximated by a deep neural network. The continuity of implicit representations makes the algorithm independent of the size and topology of the shapes. In this talk, I demonstrate how deep neural networks, along with implicit representations, can be used to precisely predict the deformation of a material after the application of a specific force. The model is trained using a set of custom finite element simulations to generalize to unseen forces.
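
As a small illustration of what a signed distance function is, the sketch below gives the analytic SDF of a sphere: it returns a negative value inside the shape, zero on the surface, and a positive value outside. This is a textbook example rather than the learned model from the talk, where a neural network would approximate such a function; the helper names are hypothetical.

```python
import math

def sphere_sdf(point, center=(0.0, 0.0, 0.0), radius=1.0):
    """Signed distance from a 3D point to the surface of a sphere."""
    return math.dist(point, center) - radius

def union_sdf(d1, d2):
    """Combine two shapes: the union's distance value is the smaller one."""
    return min(d1, d2)

inside = sphere_sdf((0.0, 0.0, 0.0))      # centre of the unit sphere
on_surface = sphere_sdf((1.0, 0.0, 0.0))  # point on the surface
outside = sphere_sdf((2.0, 0.0, 0.0))     # one unit away from the surface

# Query the union of the unit sphere and a second sphere centred at x = 3.
p = (2.0, 0.0, 0.0)
combined = union_sdf(sphere_sdf(p), sphere_sdf(p, center=(3.0, 0.0, 0.0)))
```

Because the shape is defined by a function that can be queried at any point, the representation does not depend on a fixed number of vertices or samples.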

Investigation of interpretability mechanisms for heterogeneous data using machine learning
Gerald Meier, Mon, 28. 11. 2022, Room B006 and https://webroom.hrz.tu-chemnitz.de/gl/jul-2tw-4nz
For artificial intelligence methods to gain wider acceptance, the powerful models developed over recent years need to improve the transparency of their decision making (Adadi & Berrada, 2020). Due to the impact which biased data can have on the prediction made by a model, criticism of the opaqueness of the popular AI methods arose (Mattu, Angwin, Larson, & Kirchner, 2016). The term Explainable AI (XAI) was coined and has been a growing research field since. There are different approaches and XAI algorithms for different models and different kinds of data. The type of data most used and produced for business and commercial processes is structured data. It is formatted in tabular shape, and its values are divided into categorical and numerical values. Unexpectedly, neural network models, which excel with unstructured data, did not perform comparably well on structured data, with data heterogeneity being a particular issue (Borisov et al., 2022). The thesis analyses how data heterogeneity and other related properties affect the selection of XAI methods. The most relevant properties besides heterogeneity are identified as a prerequisite and a foundation for the implementation of a prototype program. This program takes a dataset and auxiliary parameters as input and outputs a recommended XAI method.

Meta-Learning and Neuroevolution
Valentin Forch, Wed, 23. 11. 2022, Room 1/368a and https://webroom.hrz.tu-chemnitz.de/gl/jul-2tw-4nz
To build neural network models, researchers rely on their intuitions, reasoning, or knowledge about what worked best for other people with similar problems. However, most often, it is not clear which exact model topology, hyperparameter set, or training schedule will give satisfactory results. This can lead to a laborious cycle of model adjustments and evaluations. Moreover, a good model may be too complex to be even considered by humans. The past decade has shown that doing away with expert knowledge and automating the process of knowledge extraction with deep learning can give tremendous results, e.g., when learning the game of Go without any human supervision. Considering the exponential growth of available computing power, it appears logical to forego the manual design of neural network models and instead use parallelizable optimization algorithms. The main classes of these meta-optimizers are meta-learning and evolutionary search. Both enable the optimization of virtually every part of a learning system, opening up new spaces for building more powerful and also biologically more plausible neural networks.

Detecting anomalies in system logs with a compact convolutional transformer - part 2
René Larisch, Wed, 9. 11. 2022, Room 1/368a and https://webroom.hrz.tu-chemnitz.de/gl/jul-2tw-4nz
Computer systems play an important role in ensuring the correct functioning of critical systems such as train stations, power stations, emergency systems, and server infrastructures. To ensure the correct functioning and safety of these computer systems, the detection of abnormal system behavior is crucial. For that purpose, monitoring log data (mirroring the recent and current system status) is very commonly used. Because log data consists mainly of words and numbers, recent work has used transformer-based networks to analyze the log data and predict anomalies. Despite their success in fields such as natural language processing and computer vision, a disadvantage of transformers is their huge number of trainable parameters, which leads to long training times. In this talk, I will present how a compact convolutional transformer can be used to detect anomalies in log data on two common log datasets from the supercomputers Blue Gene/L and Spirit. Using convolutional layers reduces the number of trainable parameters and enables the processing of many consecutive log lines. Our results demonstrate that the combination of convolutional processing and self-attention improves the performance of anomaly detection in comparison to other transformer-based approaches. At the beginning of my talk, I will give a short introduction to transformer networks before presenting the log data analysis.